11.2. Storage Devices

Systems designers classify devices in the memory hierarchy according to how programs access their data. Primary storage devices can be accessed directly by a program on the CPU. That is, the CPU’s assembly instructions encode the exact location of the data that the instructions should retrieve. Examples of primary storage include CPU registers and main memory (RAM), which assembly instructions reference directly (for example, in IA32 assembly as %reg and (%reg), respectively).

In contrast, CPU instructions cannot directly refer to secondary storage devices. To access the contents of a secondary storage device, a program must first request that the device copy its data into primary storage (typically memory). The most familiar types of secondary storage devices are disk devices such as hard disk drives and solid-state drives, which persistently store file data. Other examples include floppy disks, magnetic tape cartridges, and even remote file servers.

Even though you may not have considered the distinction between primary and secondary storage in these terms before, it’s likely that you have encountered their differences in programs already. For example, after declaring and assigning ordinary variables (primary storage), a program can immediately use them in arithmetic operations. When working with file data (secondary storage), the program must read values from the file into memory variables before it can access them.
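The difference can be seen in a small sketch. The file contents, the helper name `read_value_from_file`, and the use of a temporary file are assumptions made for illustration only; the point is that the file's value must be copied into a variable before the program can compute with it.

```python
import os
import tempfile

# Primary storage: an ordinary variable can be used in arithmetic immediately.
x = 40
total = x + 2


def read_value_from_file(path):
    """Copy an integer stored as text in a file into a memory variable."""
    with open(path, "r") as f:
        return int(f.read().strip())


def demo():
    # Create a temporary file (hypothetical data) that holds the value 40.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
        f.write("40\n")
        path = f.name
    try:
        y = read_value_from_file(path)  # secondary storage -> memory
        return y + 2                    # arithmetic happens on the in-memory copy
    finally:
        os.remove(path)
```

Both `total` and `demo()` compute the same sum, but the second path needs an explicit read step before the addition.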

Several other important criteria for classifying memory devices arise from their performance and capacity characteristics. The three most interesting measures are:

- Capacity: The amount of data a device can store. Capacity is typically measured in bytes.

- Latency: The amount of time it takes for a device to respond with data after it has been instructed to perform a data retrieval operation. Latency is typically measured in either fractions of a second (for example, milliseconds or nanoseconds) or CPU cycles.

- Transfer rate: The amount of data that can be moved between the device and main memory over some interval of time. Transfer rate is also known as throughput and is typically measured in bytes per second.
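As a rough illustration of how latency and transfer rate combine, the sketch below estimates the time for a single request as the latency plus the time to move the data. This is a simplified model and an assumption of this example, not a formula from the text: it ignores queuing, caching, and variation in the sustained rate. The function name and the sample device figures are hypothetical.

```python
def transfer_time_seconds(size_bytes, latency_seconds, rate_bytes_per_second):
    """Estimate one request's duration: initial delay plus data movement time."""
    return latency_seconds + size_bytes / rate_bytes_per_second


# Hypothetical device: 5 ms latency, 100 megabytes per second transfer rate.
one_megabyte = 1_000_000
estimate = transfer_time_seconds(one_megabyte, 0.005, 100_000_000)
```

For small requests the latency term dominates the estimate, whereas for large requests the transfer rate term dominates.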

Exploring the variety of devices in a modern computer reveals a huge disparity in device performance across all three of these measures. The performance variance primarily arises from two factors: distance and variations in the technologies used to implement the devices.

Distance contributes because, ultimately, any data that a program wants to use must be available to the CPU’s arithmetic logic unit (ALU) for processing. CPU designers place registers close to the ALU to minimize the time it takes for a signal to propagate between the two. Thus, while registers can store only a few bytes and there aren’t many of them, the values stored are available to the ALU almost immediately! In contrast, secondary storage devices like disks transfer data to memory through various controller devices that are connected by longer wires. The extra distance and intermediate processing slow down secondary storage considerably.

The underlying technology also significantly affects device performance. Registers and caches are built from relatively simple circuits, consisting of just a few logic gates. Their small size and minimal complexity ensure that electrical signals can propagate through them quickly, reducing their latencies. On the opposite end of the spectrum, traditional hard disks contain spinning magnetic platters that store hundreds of gigabytes. Although they offer dense storage, their access latency is relatively high because they must mechanically align and rotate components into the correct positions.

The remainder of this section examines the details of primary and secondary storage devices and analyzes their performance characteristics.

11.2.1. Primary Storage

Primary storage devices consist of random access memory (RAM), which means the time it takes to access data is not affected by the data’s location in the device. That is, RAM doesn’t need to worry about things like moving parts into the correct position or rewinding tape spools. There are two widely used types of RAM, static RAM (SRAM) and dynamic RAM (DRAM), and both play an important role in modern computers. Table 1 characterizes the performance measures of common primary storage devices and the types of RAM they use.

| Device | Capacity | Approx. latency | RAM type |
|---|---|---|---|
| Register | 4 - 8 bytes | < 1 ns | SRAM |
| CPU cache | 1 - 32 megabytes | 5 ns | SRAM |
| Main memory | 4 - 64 gigabytes | 100 ns | DRAM |
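Because latency can also be expressed in CPU cycles, it can help to convert the approximate latencies in Table 1. The sketch below assumes a hypothetical 3 GHz clock (three cycles per nanosecond); the clock speed and the function name are illustrative assumptions, not values from the table.

```python
def latency_in_cycles(latency_ns, clock_ghz=3.0):
    """Convert a latency in nanoseconds to CPU cycles.

    A clock running at clock_ghz GHz completes clock_ghz cycles per nanosecond.
    """
    return latency_ns * clock_ghz


# Approximate latencies from Table 1, using 1 ns as the register upper bound.
table_1_latencies_ns = {"register": 1, "CPU cache": 5, "main memory": 100}
cycles = {name: latency_in_cycles(ns) for name, ns in table_1_latencies_ns.items()}
```

Under that assumed clock, a main memory access costs on the order of hundreds of cycles, compared with a handful for on-CPU storage.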

SRAM stores data in small electrical circuits (for example, latches). SRAM is typically the fastest type of memory, and designers integrate it directly into a CPU to build registers and caches. SRAM is relatively expensive in its cost to build, cost to operate (power consumption), and in the amount of space it occupies. Collectively, those costs limit the amount of SRAM storage that a CPU can include.

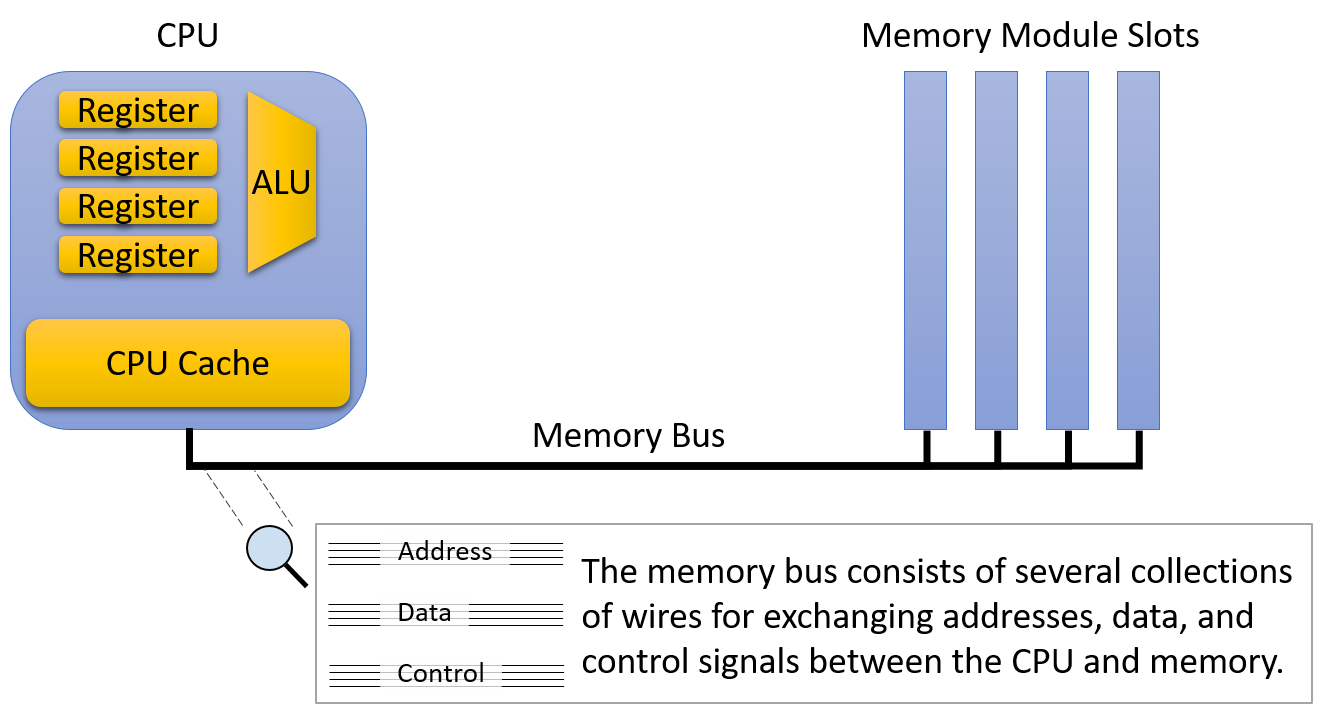

DRAM stores data using electrical components called capacitors that hold an electrical charge. It’s called "dynamic" because a DRAM system must frequently refresh the charge of its capacitors to maintain a stored value. Modern systems use DRAM to implement main memory on modules that connect to the CPU via a high-speed interconnect called the memory bus.

Figure 1 illustrates the positions of primary storage devices relative to the memory bus. To retrieve a value from memory, the CPU puts the address of the data it would like to retrieve on the memory bus and signals that the memory modules should perform a read. After a short delay, the memory module sends the value stored at the requested address across the bus to the CPU.
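The read sequence described above can be modeled with a toy simulation. The classes `MemoryModule` and `MemoryBus` are hypothetical names invented for this sketch; real hardware performs these steps with electrical signals, not method calls.

```python
class MemoryModule:
    """Toy stand-in for a DRAM module: maps addresses to stored values."""

    def __init__(self, contents):
        self.contents = dict(contents)

    def read(self, address):
        return self.contents[address]


class MemoryBus:
    """Toy bus that carries an address to memory and a value back to the CPU."""

    def __init__(self, module):
        self.module = module
        self.log = []  # records each step of the exchange, in order

    def cpu_read(self, address):
        self.log.append(("address", address))  # CPU places the address on the bus
        self.log.append(("signal", "read"))    # CPU signals that it wants a read
        value = self.module.read(address)      # module looks up the stored value
        self.log.append(("data", value))       # module sends the value to the CPU
        return value


bus = MemoryBus(MemoryModule({0x1000: 42}))
value = bus.cpu_read(0x1000)
```

The `log` list makes the ordering of the three steps visible: address, read signal, then data.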

Even though the CPU and main memory are physically just a few inches away from each other, data must travel through the memory bus when it moves between the CPU and main memory. The extra distance and circuitry between them increase the latency and reduce the transfer rate of main memory relative to on-CPU storage. As a result, the memory bus is sometimes referred to as the von Neumann bottleneck. Of course, despite its lower performance, main memory remains an essential component because it stores several orders of magnitude more data than can fit on the CPU. Consistent with other forms of storage, there’s a clear trade-off between capacity and speed.

CPU cache (pronounced "cash") occupies the middle ground between registers and main memory, both physically and in terms of its performance and capacity characteristics. A CPU cache typically stores a few kilobytes to megabytes of data directly on the CPU, but physically, caches are not quite as close to the ALU as registers. Thus, caches are faster to access than main memory, but they require a few more cycles than registers to make data available for computation.

Rather than the programmer explicitly loading values into the cache, control circuitry within the CPU automatically stores a subset of the main memory’s contents in the cache. CPUs strategically control which subset of main memory they store in caches so that as many memory requests as possible can be serviced by the (much higher performance) cache. Later sections of this chapter describe the design decisions that go into cache construction and the algorithms that should govern which data they store.
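To see why servicing more requests from the cache matters, consider a simple weighted average of the two latencies. This model is an assumption of the sketch: it treats every access as either a cache hit or a main memory access and ignores the cost of checking the cache on a miss. The 5 ns and 100 ns figures come from Table 1; the 95% hit rate is hypothetical.

```python
def effective_access_time_ns(hit_rate, cache_ns, memory_ns):
    """Average latency when a fraction hit_rate of requests hit the cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_ns + (1.0 - hit_rate) * memory_ns


# Hypothetical workload in which 95% of memory requests are served by the cache.
average_ns = effective_access_time_ns(0.95, cache_ns=5, memory_ns=100)
```

Under these assumptions the average works out to roughly 9.75 ns, far closer to the cache's latency than to main memory's.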

Real systems incorporate multiple levels of caches that behave like their own miniature version of the memory hierarchy. That is, a CPU might have a very small and fast L1 cache that stores a subset of a slightly larger and slower L2 cache, which in turn stores a subset of a larger and slower L3 cache. The remainder of this section describes a system with just a single cache, but the interaction between caches on a real system behaves much like the interaction between a single cache and main memory detailed later.
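A toy lookup illustrates the subset relationship between levels. This is only a sketch: the `lookup` function and the sample contents are hypothetical, and it does not model how a real CPU fills or evicts cache entries after a miss.

```python
def lookup(address, levels, main_memory):
    """Search cache levels from fastest to slowest, then fall back to memory.

    levels is a list of (name, contents) pairs ordered from L1 outward.
    Returns the name of the level that supplied the value, and the value.
    """
    for name, contents in levels:
        if address in contents:
            return name, contents[address]
    return "main memory", main_memory[address]


# Each level holds a subset of the next larger, slower level.
main_memory = {0: "a", 1: "b", 2: "c", 3: "d"}
l3 = {0: "a", 1: "b", 2: "c"}
l2 = {0: "a", 1: "b"}
l1 = {0: "a"}
levels = [("L1", l1), ("L2", l2), ("L3", l3)]
```

With these contents, `lookup(0, levels, main_memory)` is satisfied by L1, whereas `lookup(3, levels, main_memory)` misses every cache level and falls through to main memory.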

If you’re curious about the sizes of the caches and main memory on your system, the

11.2.2. Secondary Storage

Physically, secondary storage devices connect to a system even farther away from the CPU than main memory. Compared to most other computer equipment, secondary storage devices have evolved dramatically over the years, and they continue to exhibit more diverse designs than other components. The iconic punch card allowed a human operator to store data by making small holes in a thick piece of paper, similar to an index card. Punch cards, whose design dates back to the U.S. census of 1890, faithfully stored user data (often programs) through the 1960s and into the 1970s.

A tape drive stores data on a spool of magnetic tape. Although they generally offer good storage density (lots of information in a small size) for a low cost, tape drives are slow to access because they must wind the spool to the correct location. Although most computer users don’t encounter them often anymore, tape drives are still frequently used for bulk storage operations (for example, large data backups) in which reading the data back is expected to be rare. Modern tape drives arrange the magnetic tape spool into small cartridges for ease of use.

Removable media like floppy disks and optical discs are another popular form of secondary storage. Floppy disks contain a spindle of magnetic recording media that rotates over a disk head that reads and writes its contents. Optical discs such as CDs, DVDs, and Blu-ray discs store information via small indentations on the disc. The drive reads a disc by shining a laser at it, and the presence or absence of indentations causes the beam to reflect (or not), encoding zeros and ones. Figure 2 shows photos of a punch card, a tape drive, and a floppy disk.

Modern Secondary Storage

| Device | Capacity | Latency | Transfer rate |
|---|---|---|---|
| Flash disk | 0.5 - 2 terabytes | 0.1 - 1 ms | 200 - 3,000 megabytes / second |
| Traditional hard disk | 0.5 - 10 terabytes | 5 - 10 ms | 100 - 200 megabytes / second |
| Remote network server | Varies considerably | 20 - 200 ms | Varies considerably |

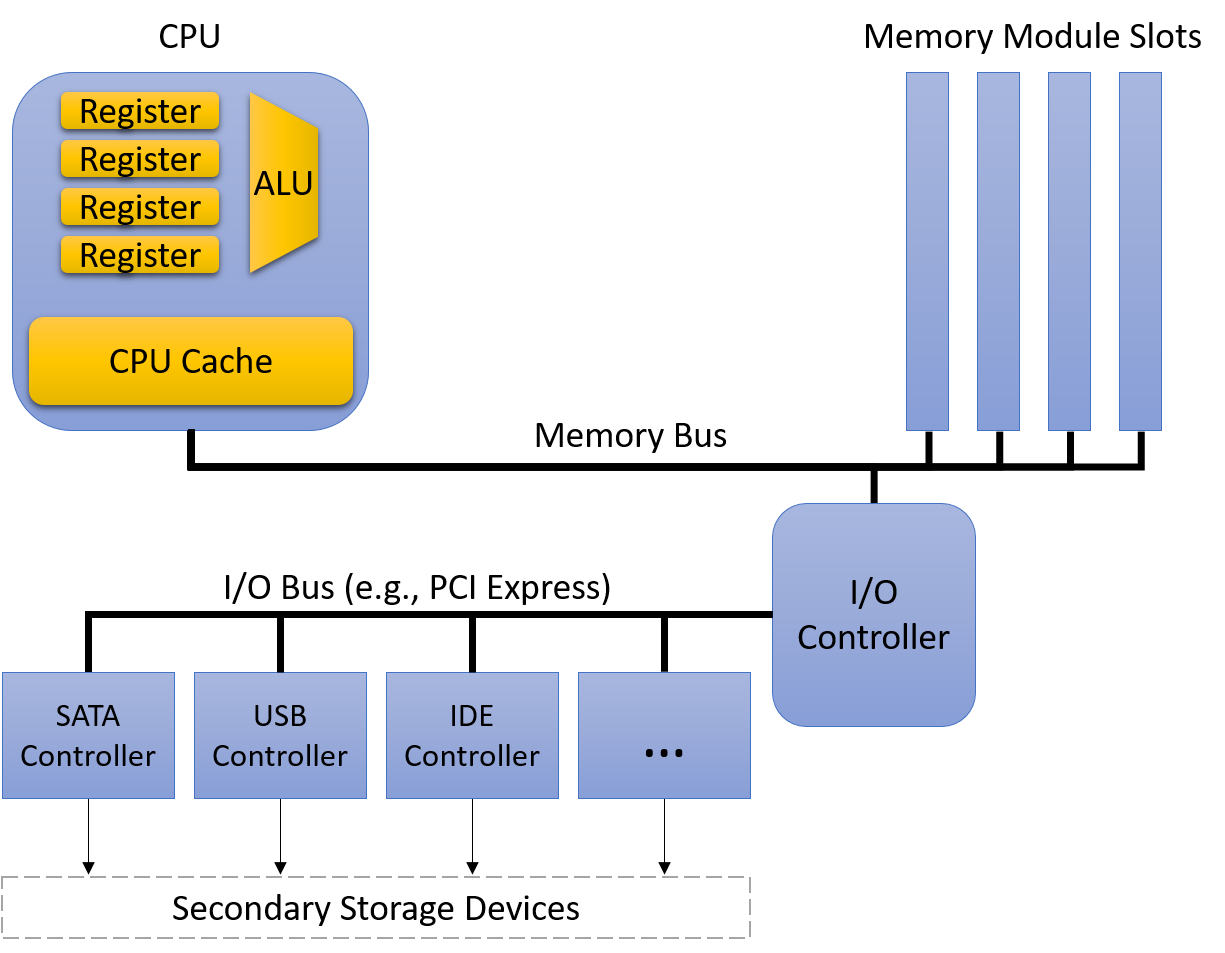

Table 2 characterizes the secondary storage devices commonly available to workstations today. Figure 3 displays how the path from secondary storage to main memory generally passes through several intermediate device controllers. For example, a typical hard disk connects to a Serial ATA controller, which connects to the system I/O controller, which in turn connects to the memory bus. These intermediate devices make disks easier to use by abstracting the disk communication details from the OS and programmer. However, they also introduce transfer delays as data flows through the additional devices.

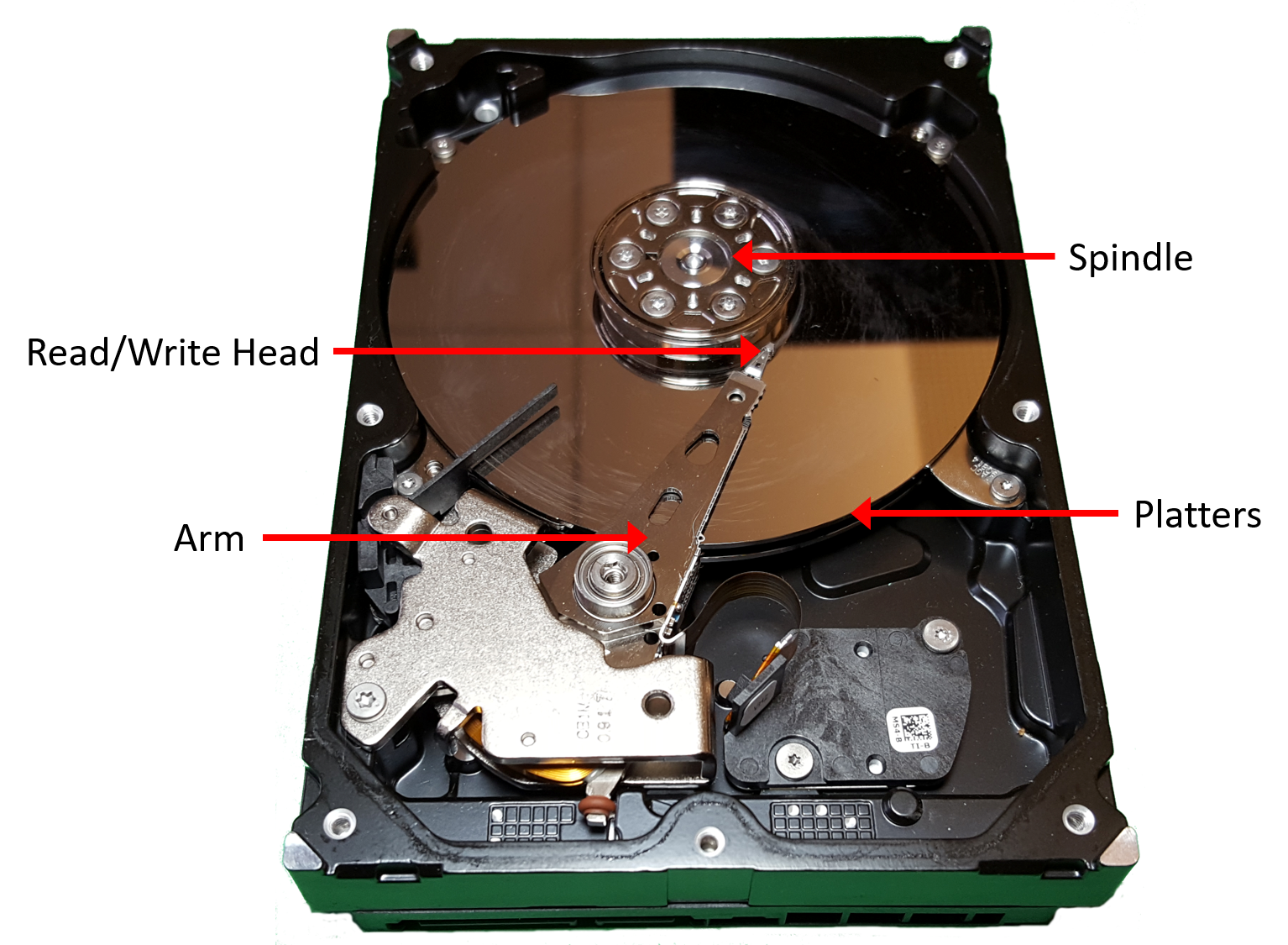

The two most common secondary storage devices today are hard disk drives (HDDs) and flash-based solid-state drives (SSDs). A hard disk consists of a few flat, circular platters made from a material that allows for magnetic recording. The platters rotate quickly, typically at speeds between 5,000 and 15,000 revolutions per minute. As the platters spin, a small mechanical arm with a disk head at the tip moves across the platter to read or write data on concentric tracks (regions of the platter located at the same distance from the center).

Figure 4 illustrates the major components of a hard disk. Before accessing data, the disk must align the disk head with the track that contains the desired data. Alignment requires extending or retracting the arm until the head sits above the track. Moving the disk arm is called seeking, and because it requires mechanical motion, seeking introduces a small seek time delay to accessing data (a few milliseconds). When the arm is in the correct position, the disk must wait for the platter to rotate until the disk head is directly above the location that stores the desired data. This introduces another short delay (a few more milliseconds), known as rotational latency. Thus, due to their mechanical characteristics, hard disks exhibit significantly higher access latencies than the primary storage devices described earlier.
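The two delays can be estimated with simple arithmetic. The sketch below assumes that, on average, the platter must turn half a revolution before the desired data reaches the head, which is a common simplification. The 4 ms seek time and the 7,200 RPM rotation speed are hypothetical example values, and the function names are illustrative.

```python
def average_rotational_latency_ms(rpm):
    """Average wait for the platter, assuming half a revolution on average."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2


def average_access_latency_ms(seek_ms, rpm):
    """Seek time plus average rotational latency for one disk access."""
    return seek_ms + average_rotational_latency_ms(rpm)


# Hypothetical disk: 4 ms seek time, platters spinning at 7,200 RPM.
rotational_ms = average_rotational_latency_ms(7200)
access_ms = average_access_latency_ms(4.0, 7200)
```

At 7,200 RPM one revolution takes about 8.3 ms, so the average rotational latency is about 4.2 ms, and the combined estimate of roughly 8.2 ms falls within the 5 - 10 ms range that Table 2 lists for traditional hard disks.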

In the past few years, SSDs, which have no moving parts (and thus lower latency), have quickly risen to prominence. They are known as solid-state drives because they don’t rely on mechanical movement. Although several solid-state technologies exist, flash memory reigns supreme in commercial SSD devices. The technical details of flash memory are beyond the scope of this book, but it suffices to say that flash-based devices allow for reading, writing, and erasing data at speeds faster than traditional hard disks. Though they don’t yet store data as densely as their mechanical counterparts, they’ve largely replaced spinning disks in most consumer devices like laptops.