Dive into Systems

Authors

Suzanne J. Matthews, Ph.D. — West Point

suzanne.matthews@westpoint.edu

Tia Newhall, Ph.D. — Swarthmore College

newhall@cs.swarthmore.edu

Kevin C. Webb, Ph.D. — Swarthmore College

kwebb@cs.swarthmore.edu

Book Version

Dive into Systems — Version 1.2

Copyright

© 2020 Dive into Systems, LLC

License: CC BY-NC-ND 4.0

This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International.

Disclaimer

The authors made every effort to ensure that the information in this book was correct. The programs in this book have been included for instructional purposes only. The authors do not offer any warranties with respect to the programs or contents of this book. The authors do not assume and hereby disclaim any liability to any party for any loss, damage, or disruption caused by errors or omissions, whether such errors or omissions result from negligence, accident, or any other cause.

The views expressed in this book are those of the authors and do not reflect the official policy or position of the Department of the Army, Department of Defense, or the U.S. Government.

Acknowledgements

The authors would like to acknowledge the following individuals for helping make Dive into Systems a success:

Formal Reviewers

Each chapter in Dive into Systems was peer-reviewed by several CS professors around the United States. We are extremely grateful to those faculty who served as formal reviewers. Your insight, time, and recommendations have improved the rigor and precision of Dive into Systems. Specifically, we would like to acknowledge the contributions of:

-

Jeannie Albrecht (Williams College) for her review and feedback on Chapter 15.

-

John Barr (Ithaca College) for his review and feedback on chapters 6, 7, and 8, and providing general advice for the x86_64 chapter.

-

Jon Bentley for providing review and feedback on section 5.1, including line-edits.

-

Anu G. Bourgeois (Georgia State University) for her review and feedback on Chapter 4.

-

Martina Barnas (Indiana University Bloomington) for her review and insightful feedback on Chapter 14, especially section 14.4.

-

David Bunde (Knox College) for his review, comments and suggestions on Chapter 14.

-

Stephen Carl (Sewanee: The University of the South) for his careful review and detailed feedback on chapters 6 and 7.

-

Bryan Chin (U.C. San Diego) for his insightful review of the ARM assembly chapter (chapter 9).

-

Amy Csizmar Dalal (Carleton College) for her review and feedback on Chapter 5.

-

Debzani Deb (Winston-Salem State University) for her review and feedback on Chapter 11.

-

Saturnino Garcia (University of San Diego) for his review and feedback on Chapter 5.

-

Tim Haines (University of Wisconsin) for his comments and review of Chapter 3.

-

Bill Jannen (Williams College) for his detailed review and insightful comments on Chapter 11.

-

Ben Marks (Swarthmore College) for comments on chapters 1 and 2.

-

Alexander Mentis (West Point) for insightful comments and line-edits of early drafts of this book.

-

Rick Ord (U.C. San Diego) for his review and suggested corrections for the Preface, and reviewing over 60% (!!) of the book, including chapters 0, 1, 2, 3, 4, 6, 7, 8 and 14. His feedback has helped us keep our notation and code consistent over the different chapters!

-

Joe Politz (U.C. San Diego) for his review and detailed suggestions for strengthening Chapter 12.

-

Brad Richards (University of Puget Sound) for his rapid feedback and suggestions for Chapter 12.

-

Kelly Shaw (Williams College) for her review and suggestions for Chapter 15.

-

Simon Sultana (Fresno Pacific University) for his review and suggested corrections for Chapter 1.

-

Cynthia Taylor (Oberlin College) for her review and suggested corrections of Chapter 13.

-

David Toth (Centre College) for his review and suggested corrections for Chapters 2 and 14.

-

Bryce Wiedenbeck (Davidson College) for his review and suggested corrections for Chapter 4.

-

Daniel Zingaro (University of Toronto Mississauga) for catching so many typos.

Additional Feedback

The following people caught random typos and other sundries. We are grateful for your help in finding typos!

-

Tanya Amert (Denison University)

-

Ihor Beliuha

-

Christiaan Biesterbosch

-

Daniel Canas (Wake Forest University)

-

Chien-Chung Shen (University of Delaware)

-

Vasanta Chaganti (Swarthmore College)

-

Stephen Checkoway (Oberlin College)

-

John DeGood (The College of New Jersey)

-

Joe Errey

-

Artin Farahani

-

Sat Garcia (University of San Diego)

-

Aaron Gember-Jacobson (Colgate University)

-

Stephen Gilbert

-

Arina Kazakova (Swarthmore College)

-

Deborah Knox (The College of New Jersey)

-

Kevin Lahey (Colgate University)

-

Raphael Matchen

-

Sivan Nachaum (Smith College)

-

Aline Normolye (Bryn Mawr College)

-

SaengMoung Park (Swarthmore College)

-

Rodrigo Piovezan (Swarthmore College)

-

Roy Ragsdale (West Point) who gave advice for restructuring the guessing game for the ARM buffer overflow exploit in chapter 9.

-

Zachary Robinson (Swarthmore College)

-

Joel Sommers (Colgate University)

-

Peter Stenger

-

Richard Weiss (Evergreen State College)

-

David Toth (Centre College)

-

Alyssa Zhang (Swarthmore College)

Early Adopters

An alpha release of Dive into Systems was piloted at West Point in Fall 2018; The beta release of the textbook was piloted at West Point and Swarthmore College in Spring 2019. In Fall 2019, Dive into Systems launched its Early Adopter Program, which enabled faculty around the United States to pilot the stable release of Dive into Systems at their institutions. The Early Adopter Program is a huge help to the authors, as it helps us get valuable insight into student and faculty experiences with the textbook. We use the feedback we receive to improve and strengthen the content of Dive into Systems, and are very thankful to everyone who completed our student and faculty surveys.

2019-2020 Early Adopters

The following individuals piloted Dive into Systems as a textbook at their institutions during the Fall 2019- Spring 2020 Academic Year:

-

John Barr (Ithaca College) - Computer Organization & Assembly Language (Comp 210)

-

Chris Branton (Drury University) - Computer Systems Concepts (CSCI 342)

-

Dick Brown (St. Olaf College) - Hardware Design (CSCI 241)

-

David Bunde (Knox College) - Introduction to Computing Systems (CS 214)

-

Bruce Char (Drexel University) - Systems Programming (CS 283)

-

Vasanta Chaganti (Swarthmore College) - Introduction to Computer Systems (CS 31)

-

Bryan Chin (U.C. San Diego) - Computer Organization and Systems Programming (CSE 30)

-

Stephen Carl (Sewanee: The University of the South) - Computer Systems and Organization (CSci 270)

-

John Dougherty (Haverford College) - Computer Organization (cs240)

-

John Foley (Smith College) - Operating Systems (CSC 262)

-

Elizabeth Johnson (Xavier University) - Programming in C

-

Alexander Kendrowitch (West Point) - Computer Organization (CS380)

-

Bill Kerney (Clovis Community College) - Assembly Programming (CSCI 45)

-

Deborah Knox (The College of New Jersey) - Computer Architecture (CSC 325)

-

Doug MacGregor (Western Colorado University) - Operating Systems/Architecture (CS 330)

-

Jeff Matocha (Ouachita Baptist University) - Computer Organization (CSCI 3093)

-

Keith Muller (U.C. San Diego) - Computer Organization and Systems Programming (CSE 30)

-

Crystal Peng (Park University) - Computer Architecture (CS 319)

-

Leo Porter (U.C. San Diego) - Introduction to Computer Architecture (CSE 141)

-

Lauren Provost (Simmons University) - Computer Architecture and Organization (CS 226)

-

Kathleen Riley (Bryn Mawr College) - Principles of Computer Organization (CMSC B240)

-

Roger Shore (High Point University) - Computer Systems (CSC-2410)

-

Tony Tong (Wheaton College, Norton MA) - Advanced Topics in Computer Science: Parallel and Distributed Computing (COMP 398)

-

Brian Toone (Samford University) - Computer Organization and Architecture (COSC 305)

-

David Toth (Centre College) - Systems Programming (CSC 280)

-

Bryce Wiedenbeck (Davidson College) - Computer Organization (CSC 250)

-

Richard Weiss (The Evergreen State College) - Computer Science Foundations: Computer Architecture (CSF)

Preface

In today’s world, much emphasis is placed on learning to code, and programming is touted as a golden ticket to a successful life. Despite all the code boot camps and programming being taught in elementary schools, the computer itself is often treated as an afterthought — it’s increasingly becoming invisible in the discussions of raising the next generations of computer scientists.

The purpose of this book is to give readers a gentle yet accessible introduction to computer systems. To write effective programs, programmers must understand a computer’s underlying subsystems and architecture. However, the expense of modern textbooks often limits their availability to the set of students that can afford them. This free online textbook seeks to make computer systems concepts accessible to everyone. It is targeted toward students with an introductory knowledge of computer science who have some familiarity with Python. If you’re looking for a free book to introduce you to basic computing principles in Python, we encourage you to read How To Think Like a Computer Scientist with Python first.

If you’re ready to proceed, please come in — the water is warm!

What This Book Is About

Our book is titled Dive into Systems and is meant to be a gentle introduction to topics in computer systems, including C programming, architecture fundamentals, assembly language, and multithreading. The ocean metaphor is very fitting for computer systems. As modern life is thought to have risen from the depths of the primordial ocean, so has modern programming risen from the design and construction of early computer architecture. The first programmers studied the hardware diagrams of the first computers to create the first programs.

Yet as life (and computing) began to wander away from the oceans from which they emerged, the ocean began to be perceived as a foreboding and dangerous place, inhabited by monsters. Ancient navigators used to place pictures of sea monsters and other mythical creatures in the uncharted waters. Here be dragons, the text would warn. Likewise, as computing has wandered ever further away from its machine-level origins, computer systems topics have often emerged as personal dragons for many computing students.

In writing this book, we hope to encourage students to take a gentle dive into computer systems topics. Even though the sea may look like a dark and dangerous place from above, there is a beautiful and remarkable world to be discovered for those who choose to peer just below the surface. So too can a student gain a greater appreciation for computing by looking below the code and examining the architectural reef below.

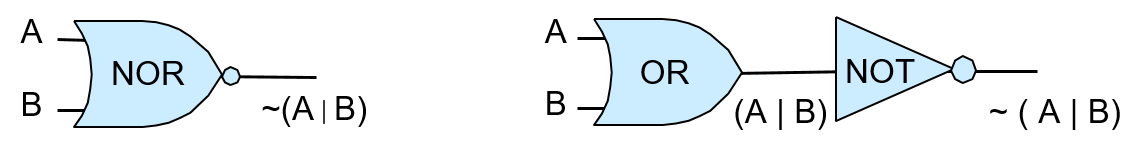

We are not trying to throw you into the open ocean here. Our book assumes only a CS1 knowledge and is designed to be a first exposure to many computer systems topics. We cover topics such as C programming, logic gates, binary, assembly, the memory hierarchy, threading, and parallelism. Our chapters are written to be as independent as possible, with the goal of being widely applicable to a broad range of courses.

Lastly, a major goal for us writing this book is for it to be freely available. We want our book to be a living document, peer reviewed by the computing community, and evolving as our field continues to evolve. If you have feedback for us, please drop us a line. We would love to hear from you!

Ways to Use This Book

Our textbook covers a broad range of topics related to computer systems, specifically targeting intermediate-level courses such as introduction to computer systems or computer organization. It can also be used to provide background reading for upper-level courses such as operating systems, compilers, parallel and distributed computing, and computer architecture.

This textbook is not designed to provide complete coverage of all systems topics. It does not include advanced or full coverage of operating systems, computer architecture, or parallel and distributed computing topics, nor is it designed to be used in place of textbooks devoted to advanced coverage of these topics in upper-level courses. Instead, it focuses on introducing computer systems, common themes in systems in the context of understanding how a computer runs a program, and how to design programs to run efficiently on systems. The topic coverage provides a common knowledge base and skill set for more advanced study in systems topics.

Our book’s topics can be viewed as a vertical slice through a computer. At the lowest layer we discuss binary representation of programs and circuits designed to store and execute programs, building up a simple CPU from basic gates that can execute program instructions. At the next layer we introduce the operating system, focusing on its support for running programs and for managing computer hardware, particularly on the mechanisms of implementing multiprogramming and virtual memory support. At the highest layer, we present the C programming language and how it maps to low-level code, how to design efficient code, compiler optimizations, and parallel computing. A reader of the entire book will gain a basic understanding of how a program written in C (and Pthreads) executes on a computer and, based on this understanding, will know some ways in which they can change the structure of their program to improve its performance.

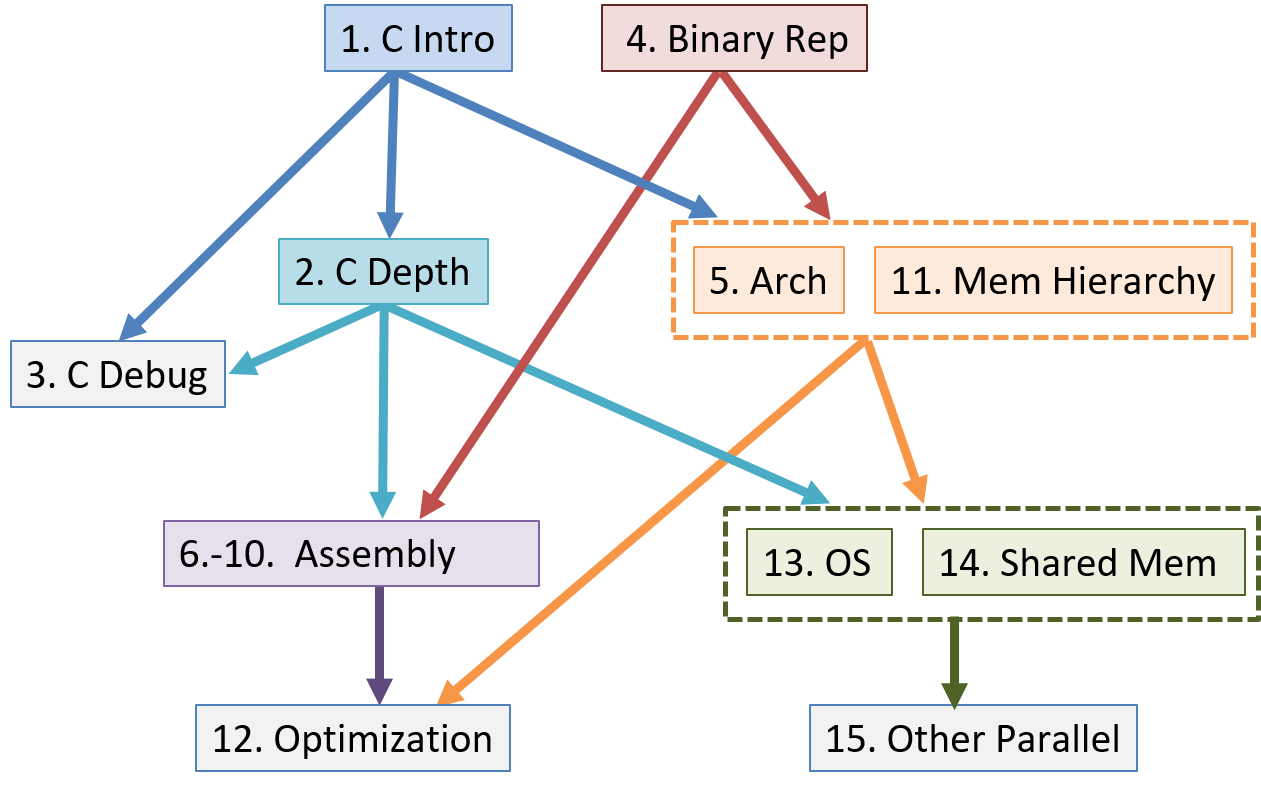

Although as a whole the book provides a vertical slice through the computer, the book chapters are written as independently as possible so that an instructor can mix and match chapters for their particular needs. The chapter dependency graph is shown below, though individual sections within chapters may not have as deep a dependency hierarchy as the entire chapter.

Summary of Chapter Topics

-

Chapter 0, Introduction: Introduction to computer systems and some tips for reading this book.

-

Chapter 1, Introduction to C Programming: Covers C programming basics, including compiling and running C programs. We assume readers of this book have had an introduction to programming in some programming language. We compare example C syntax to Python syntax so that readers familiar with Python can see how they may translate. However, Python programming experience is not necessary for reading or understanding this chapter.

-

Chapter 2, A Deeper Dive into C: Covers most of the C language, notably pointers and dynamic memory. We also elaborate on topics from Chapter 1 in more detail and discuss some advanced C features.

-

Chapter 3, C Debugging Tools: Covers common C debugging tools (GDB and Valgrind) and illustrates how they can be used to debug a variety of applications.

-

Chapter 4, Binary and Data Representation: Covers encoding data into binary, binary representation of C types, arithmetic operations on binary data, and arithmetic overflow.

-

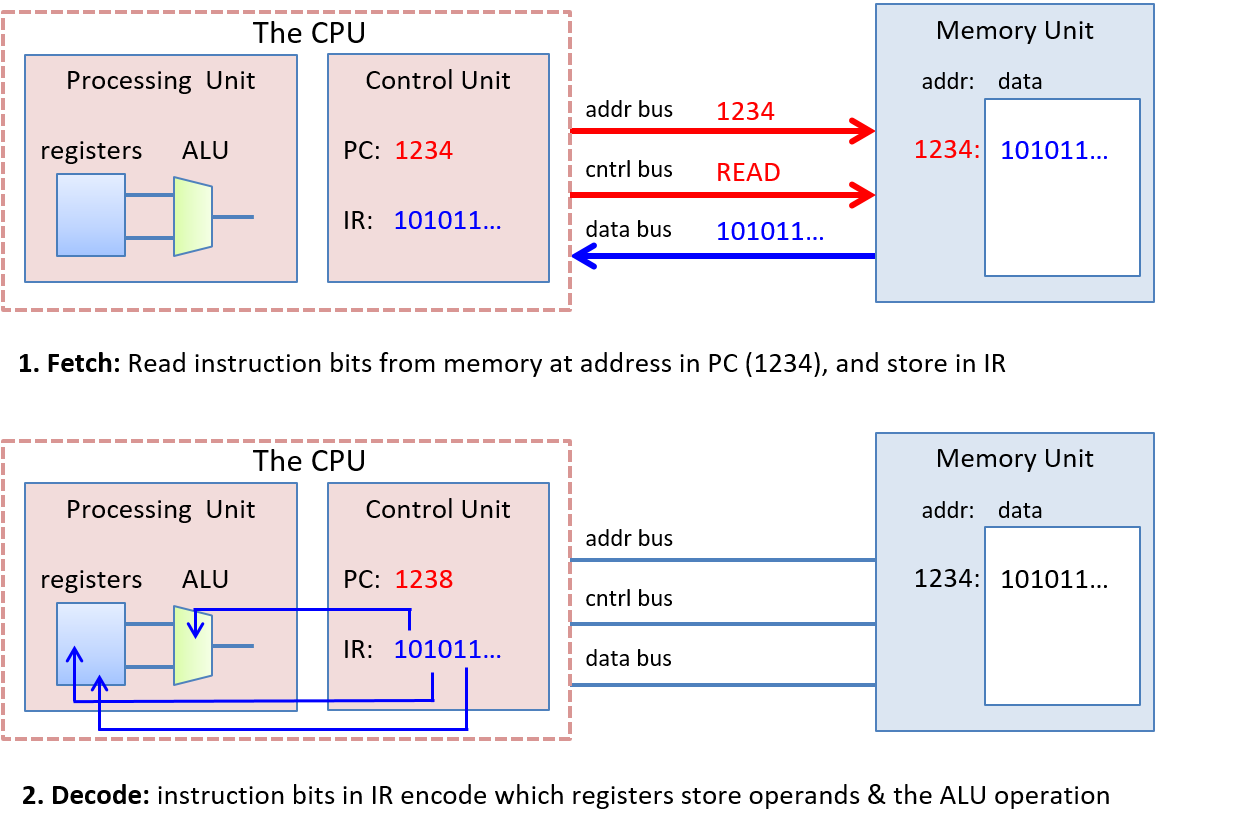

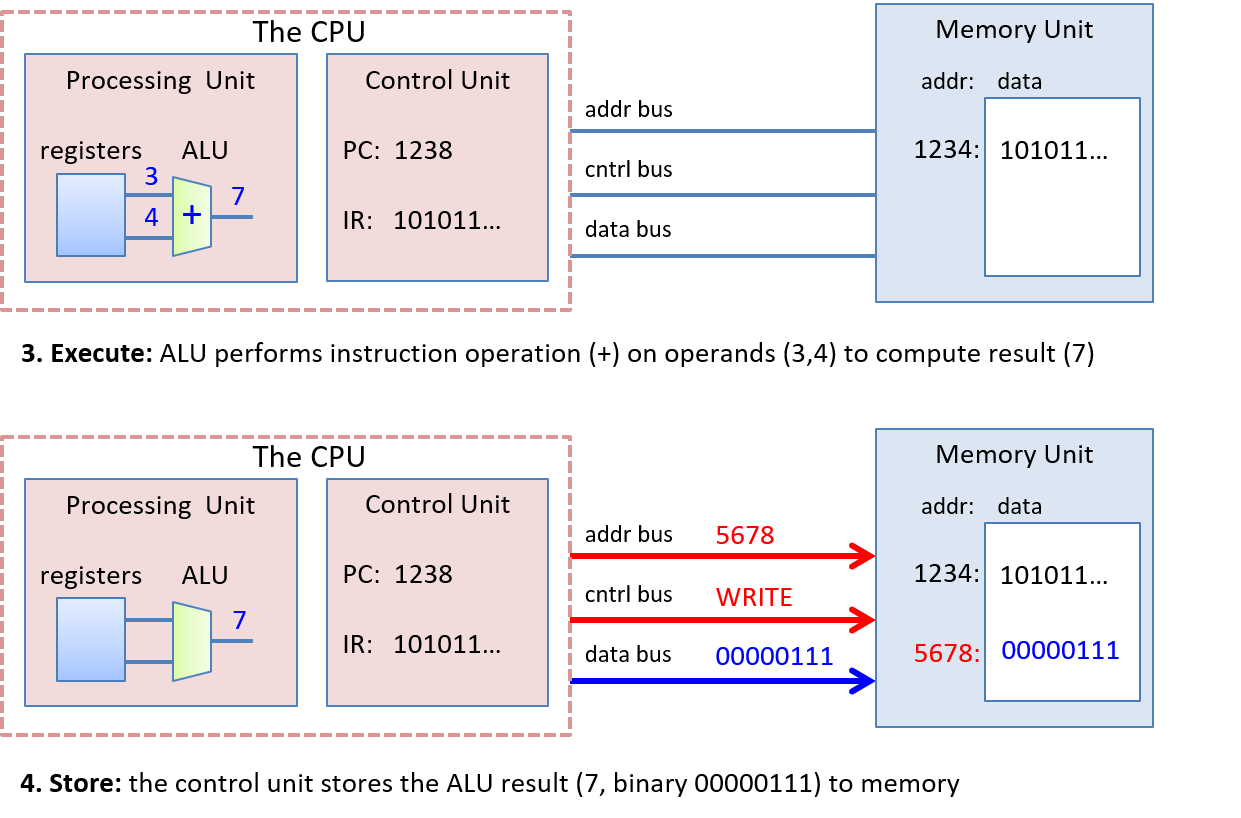

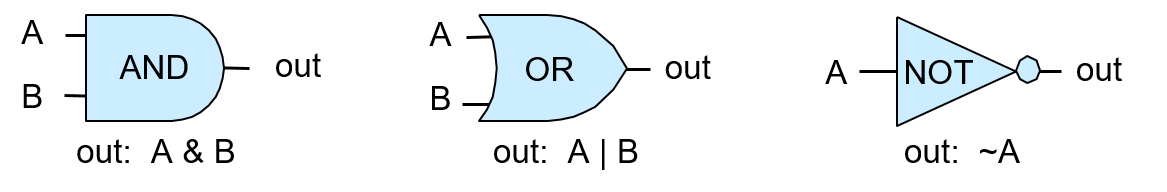

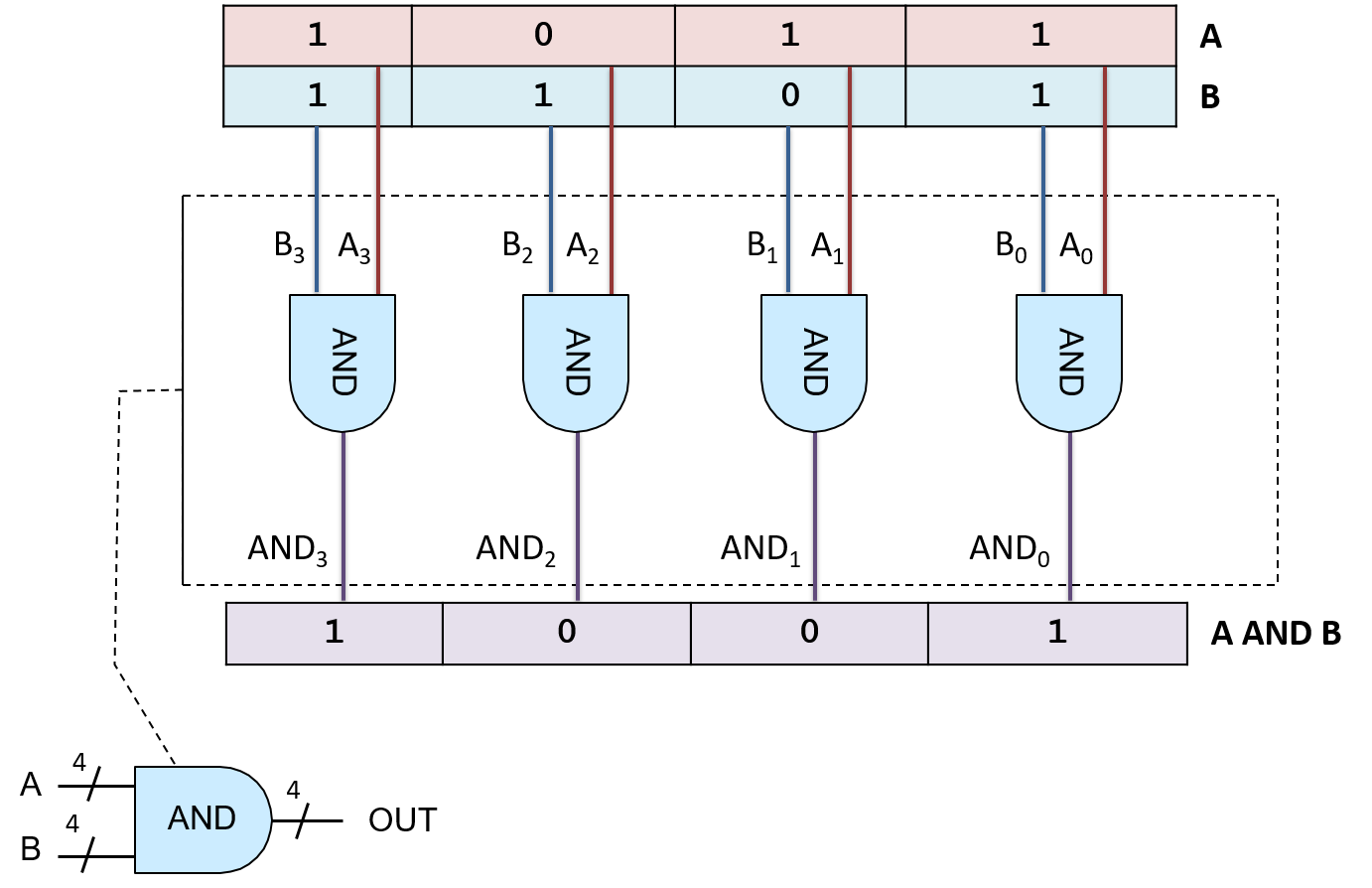

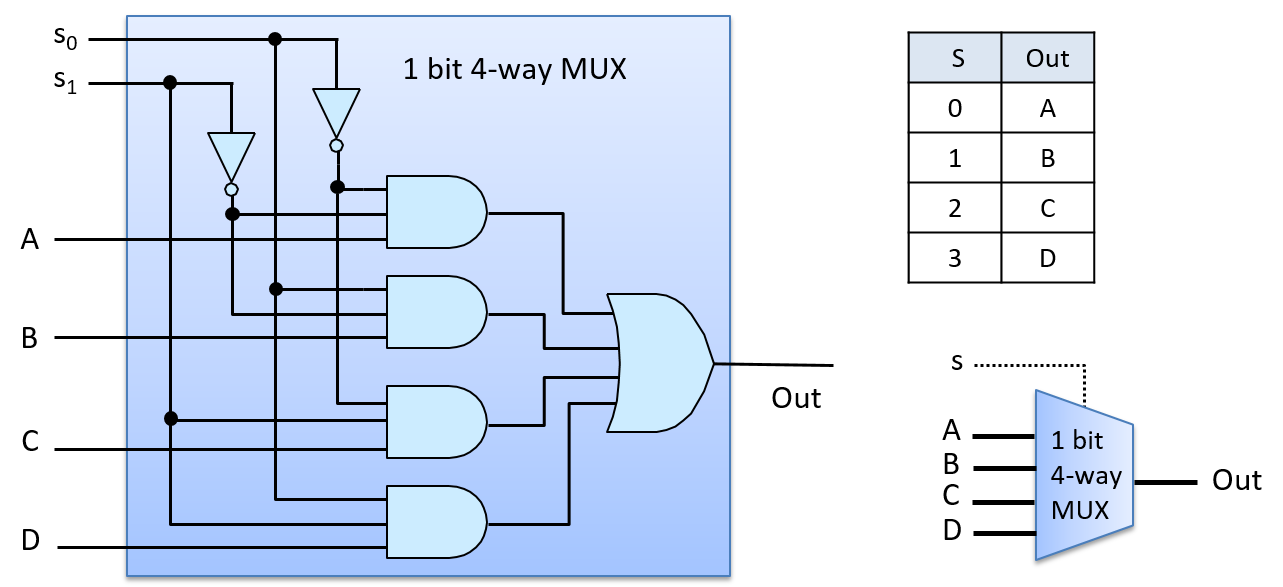

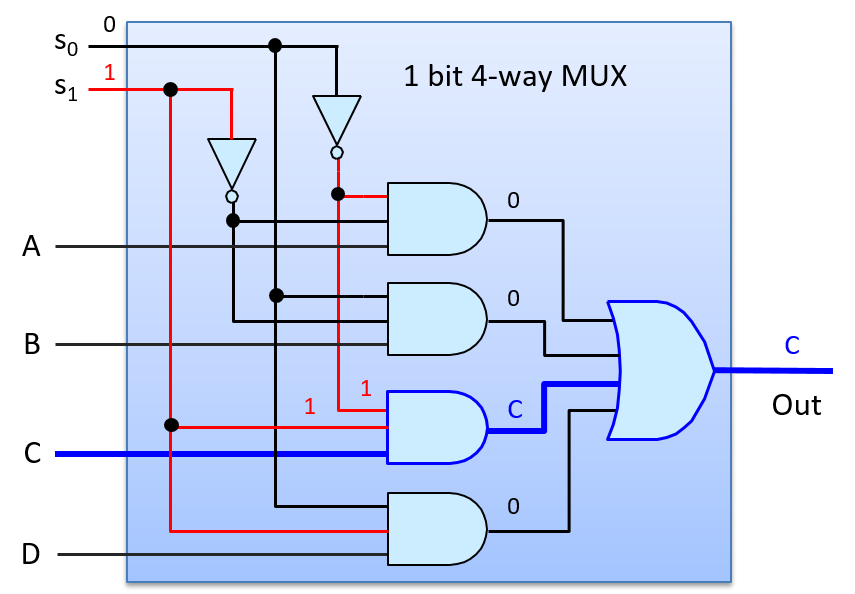

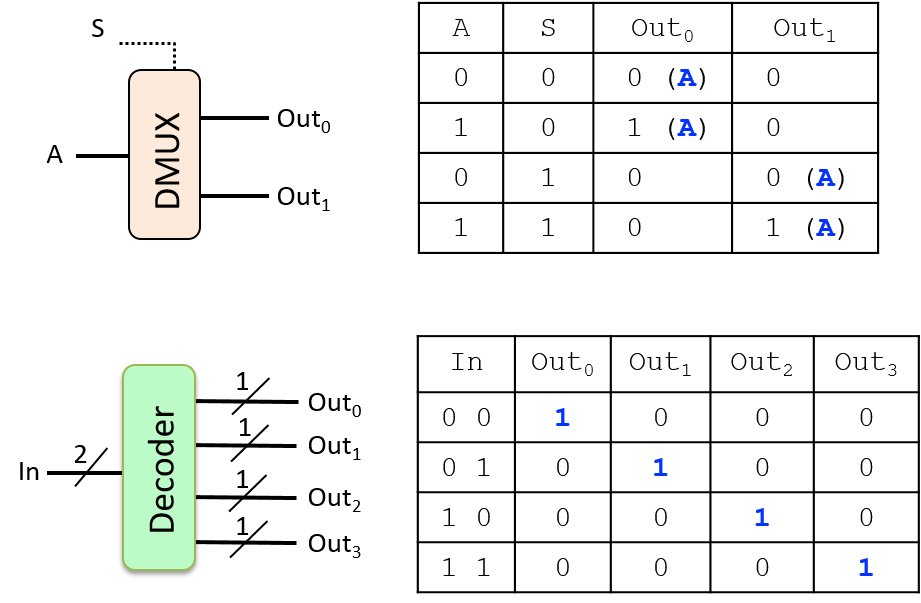

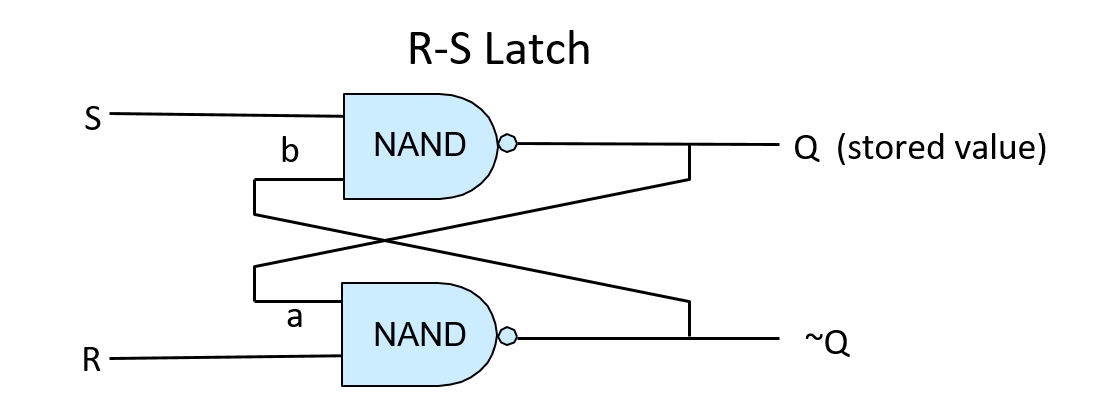

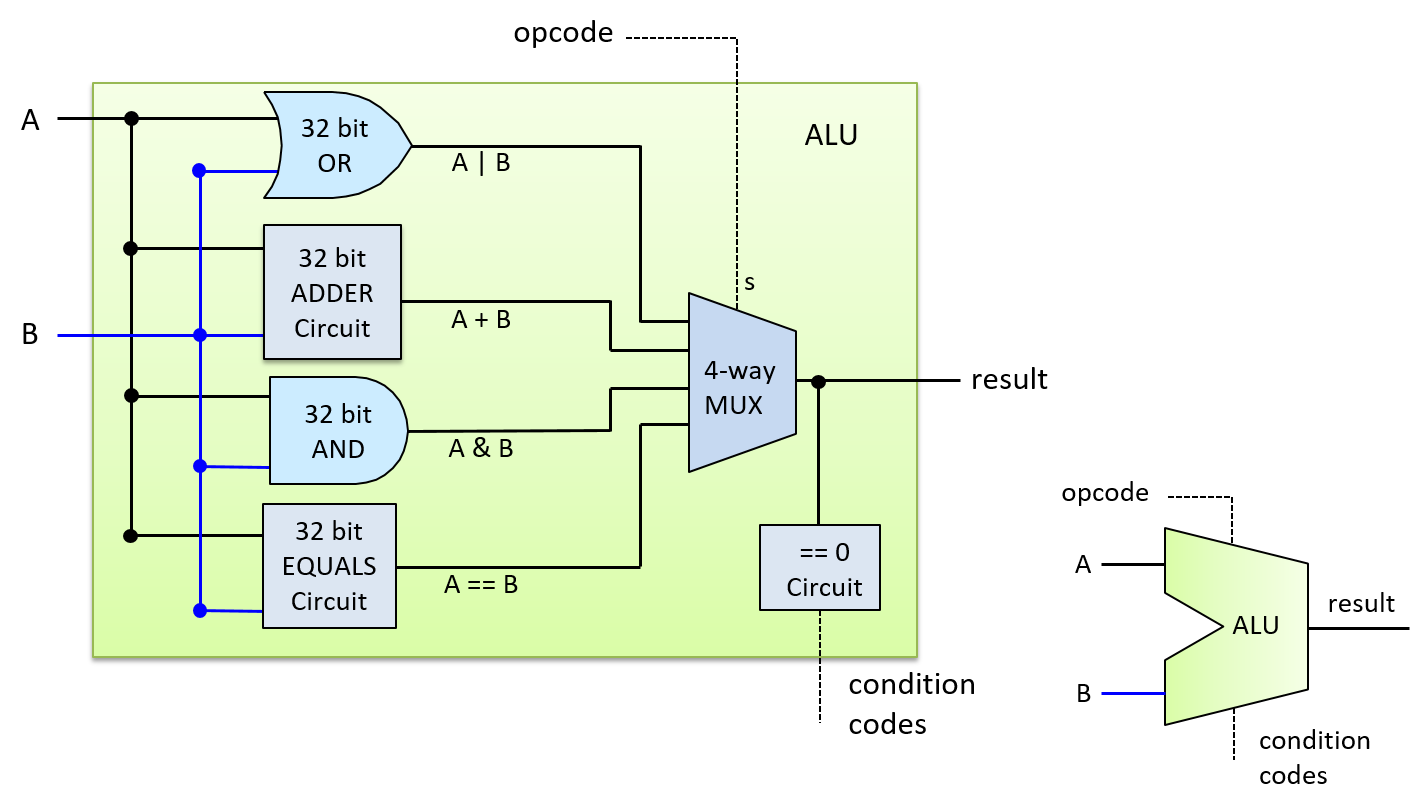

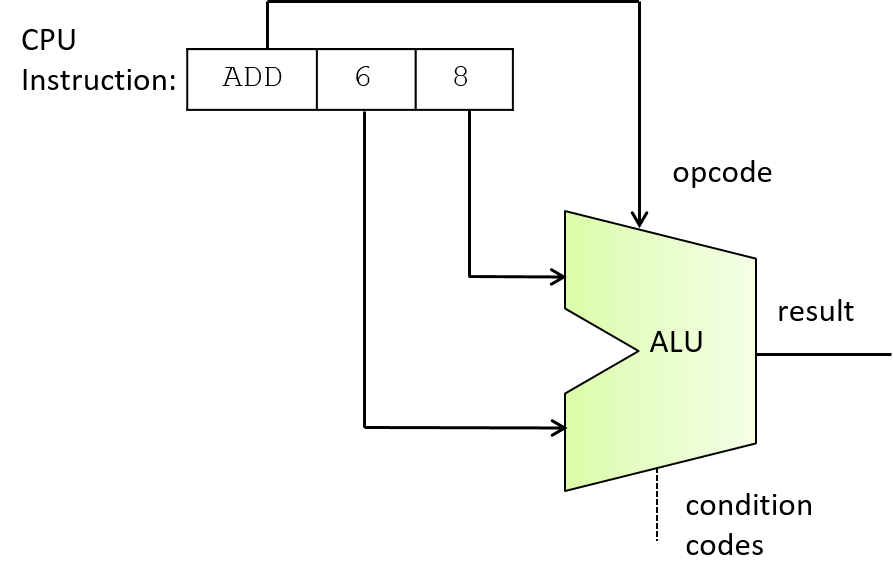

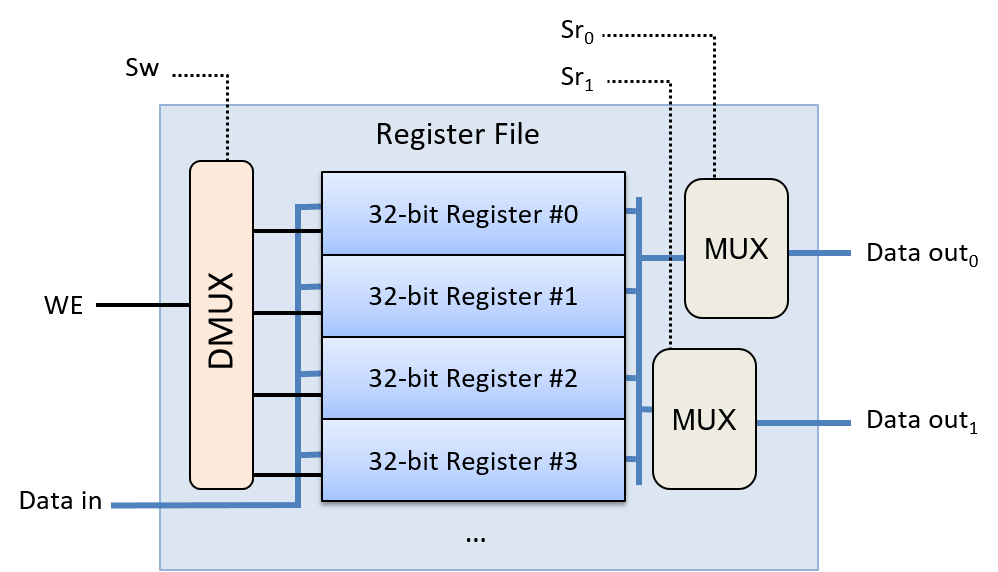

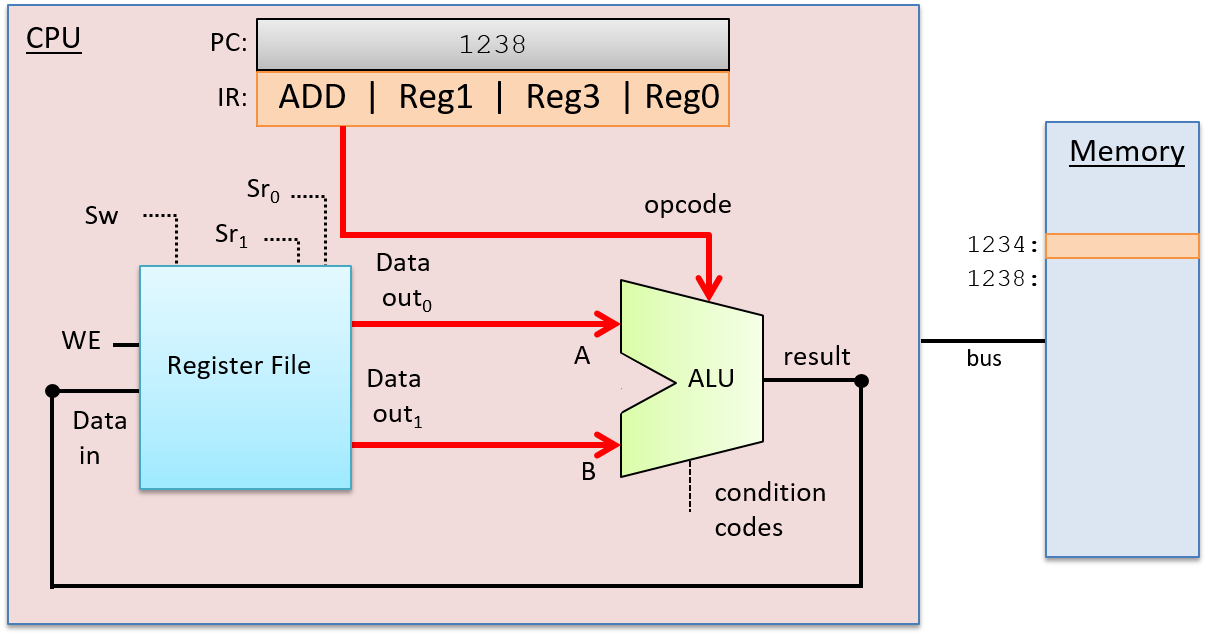

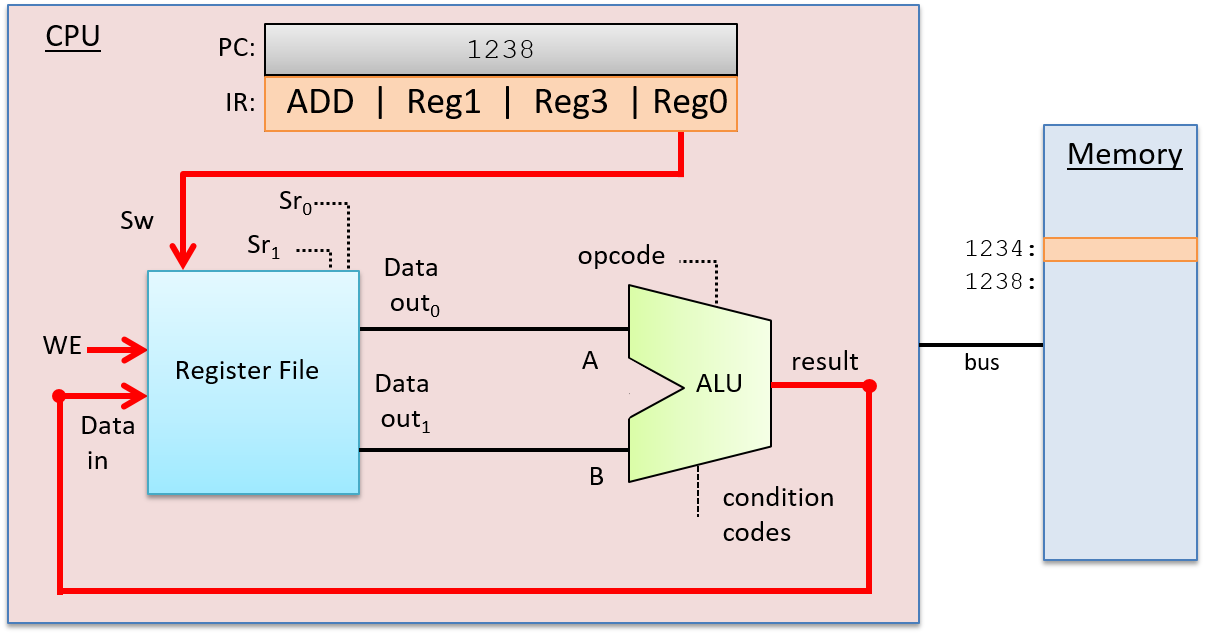

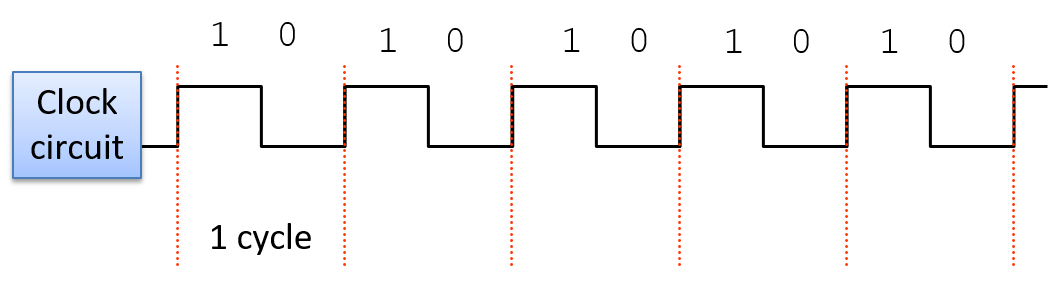

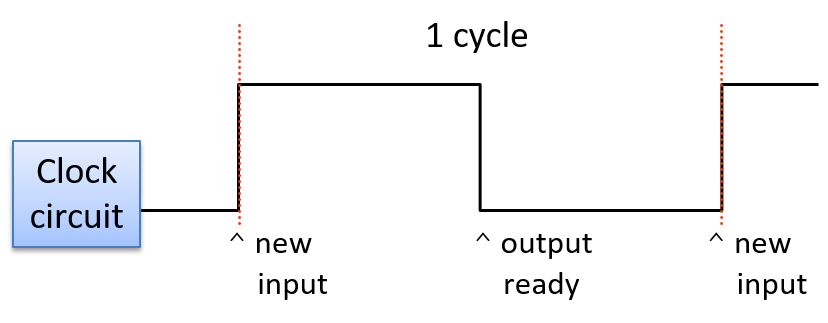

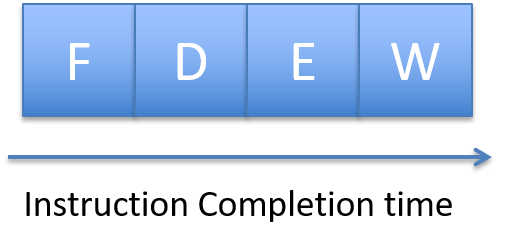

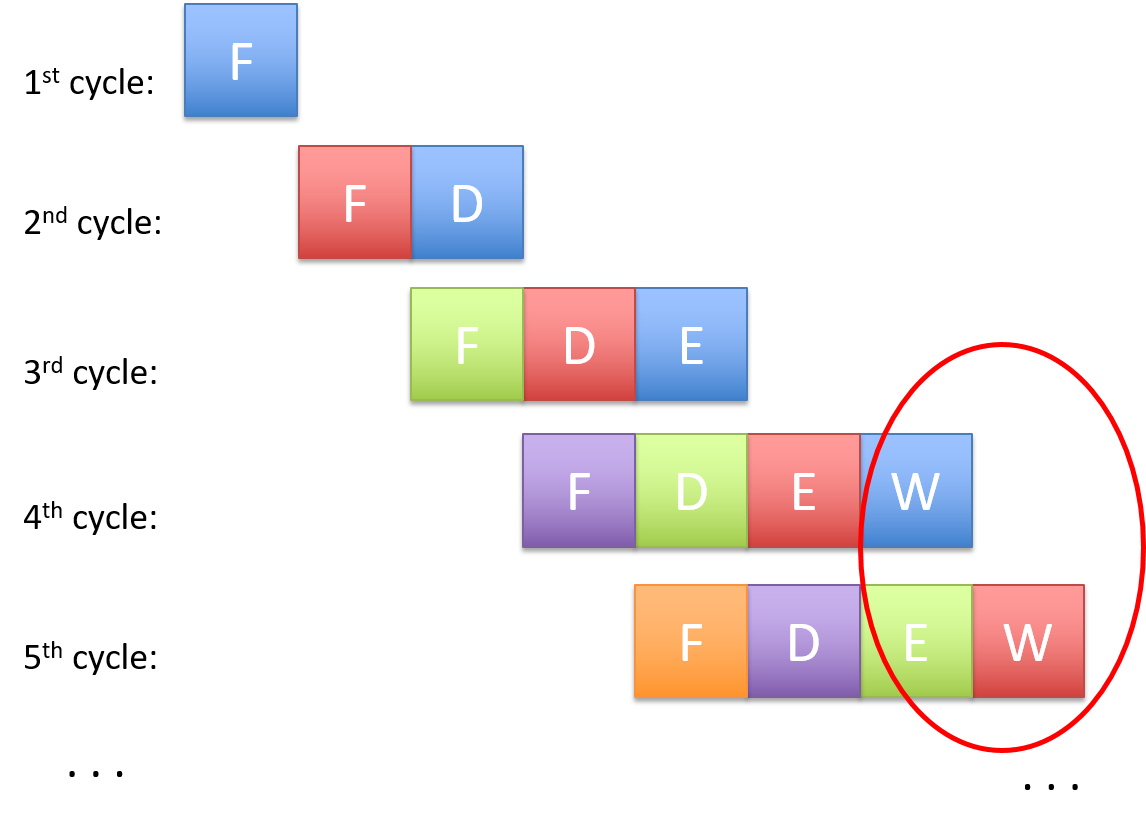

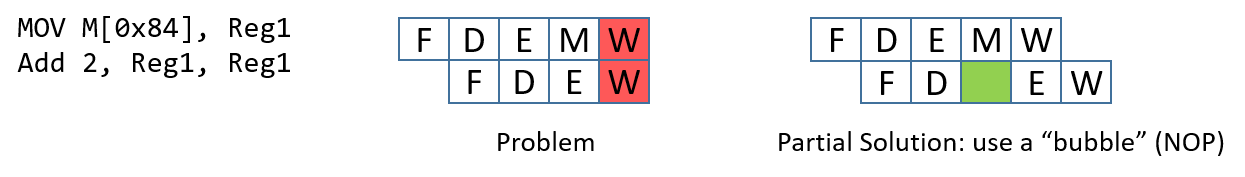

Chapter 5, Gates, Circuits, and Computer Architecture: Covers the von Neumann architecture from logic gates to the construction of a basic CPU. We characterize clock-driven execution and the stages of instruction execution though arithmetic, storage, and control circuits. We also briefly introduce pipelining, some modern architecture features, and a short history of computer architecture.

-

Chapters 6-10, Assembly Programming: Covers translating C into assembly code from basic arithmetic expressions to functions, the stack, and array and

structaccess. In three separate chapters we cover assembly from three different instruction set architectures: 32-bit x86, 64-bit x86, and 64-bit ARM. -

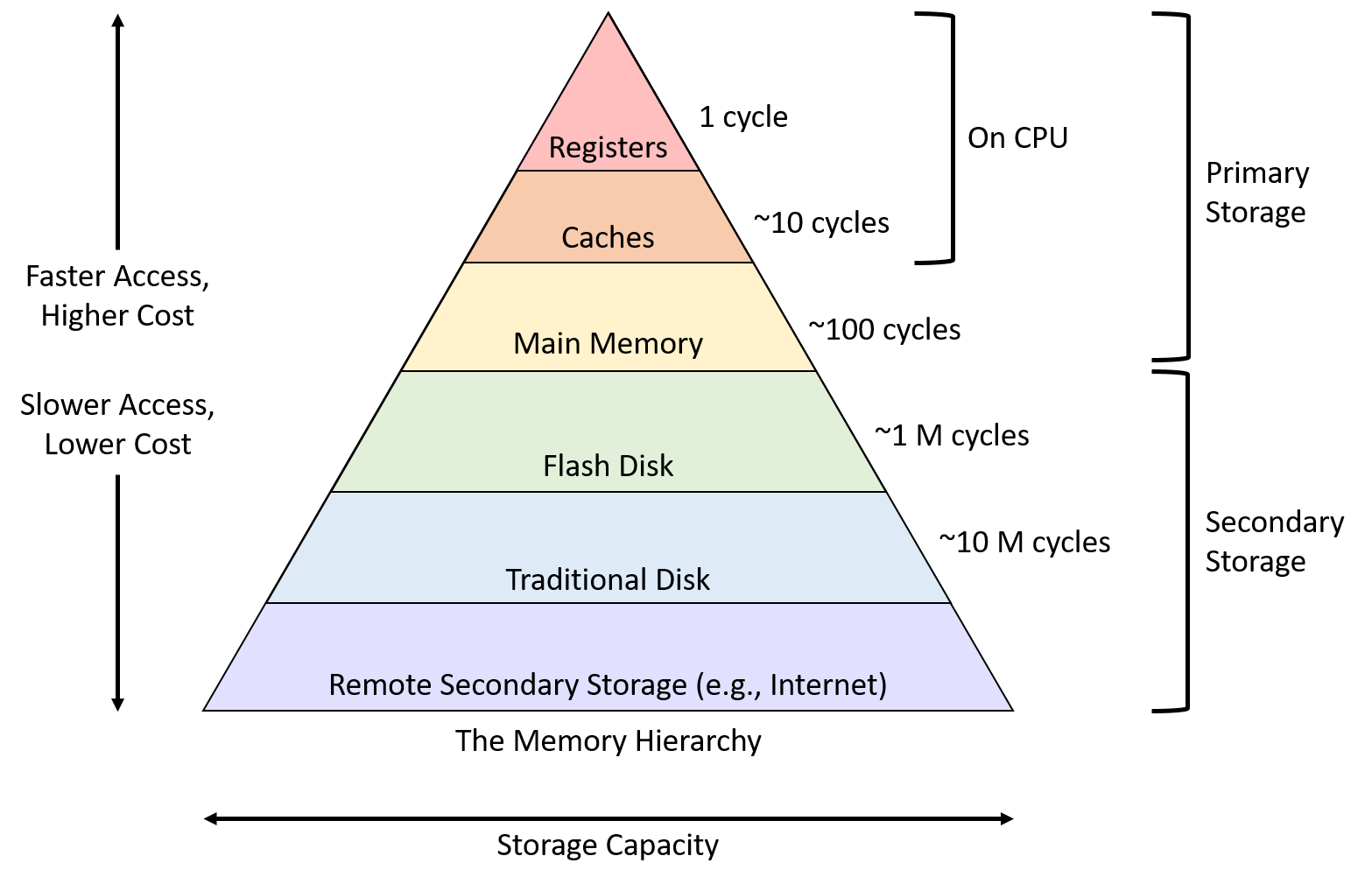

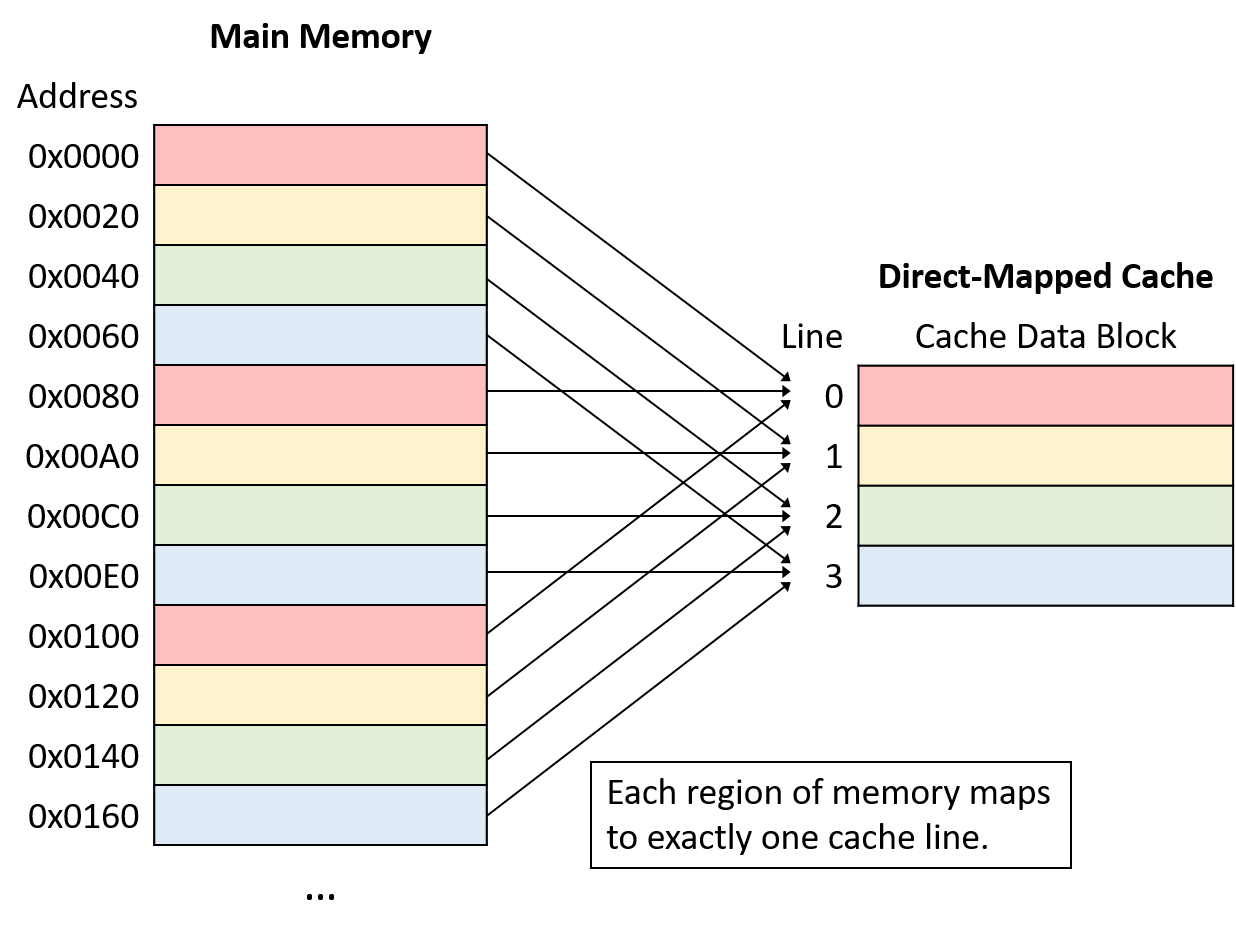

Chapter 11, Storage and the Memory Hierarchy: Covers storage devices, the memory hierarchy and its effects on program performance, locality, caching, and the Cachegrind profiling tool.

-

Chapter 12, Code Optimization: Covers compiler optimizations, designing programs with performance in mind, tips for code optimization, and quantitatively measuring a program’s performance.

-

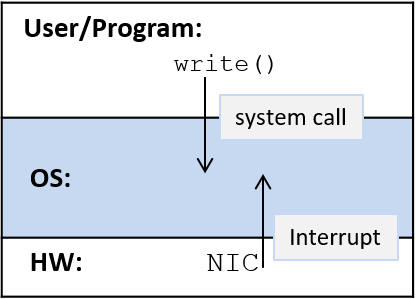

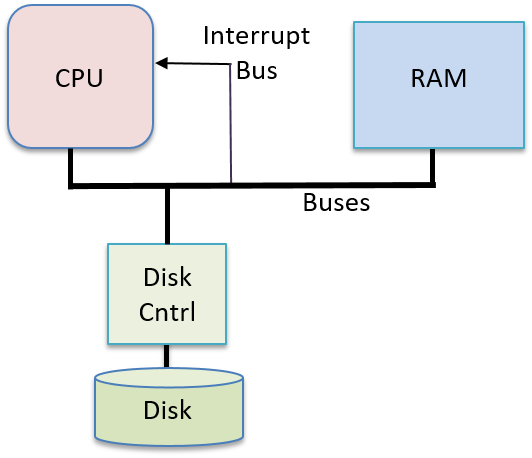

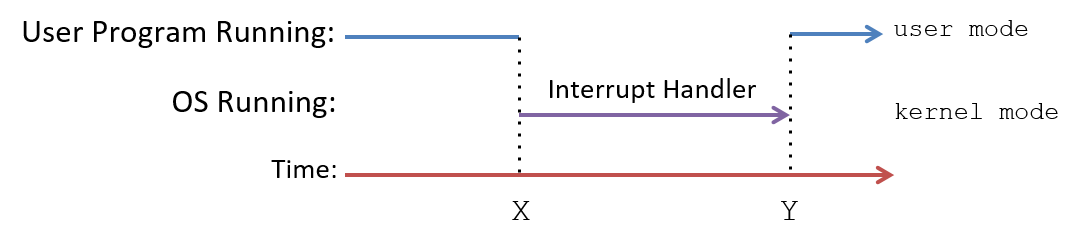

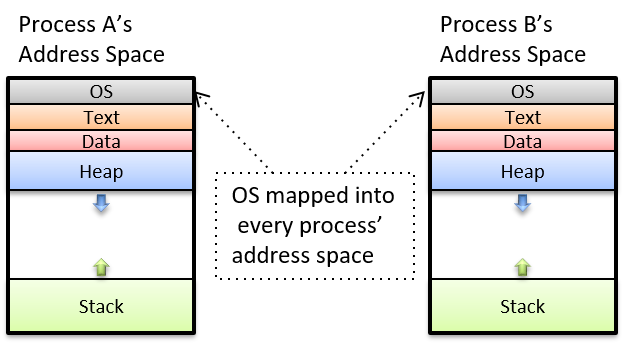

Chapter 13, Operating Systems: Covers core operating system abstractions and the mechanisms behind them. We primarily focus on processes, virtual memory, and interprocess communication (IPC).

-

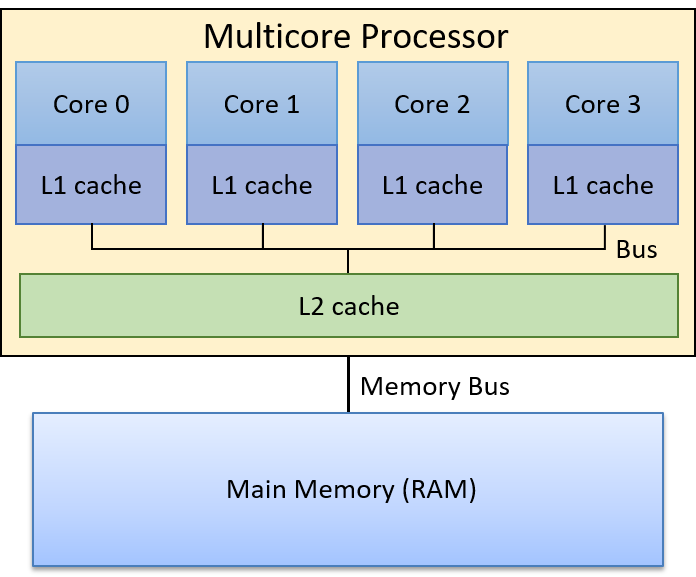

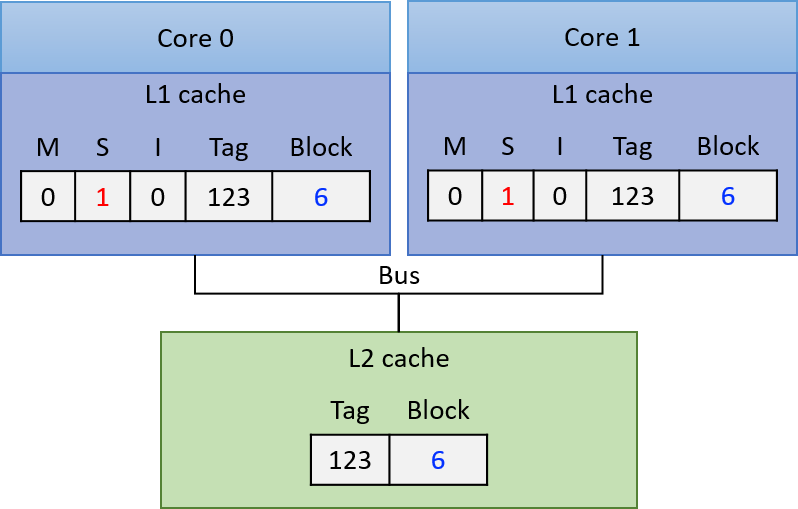

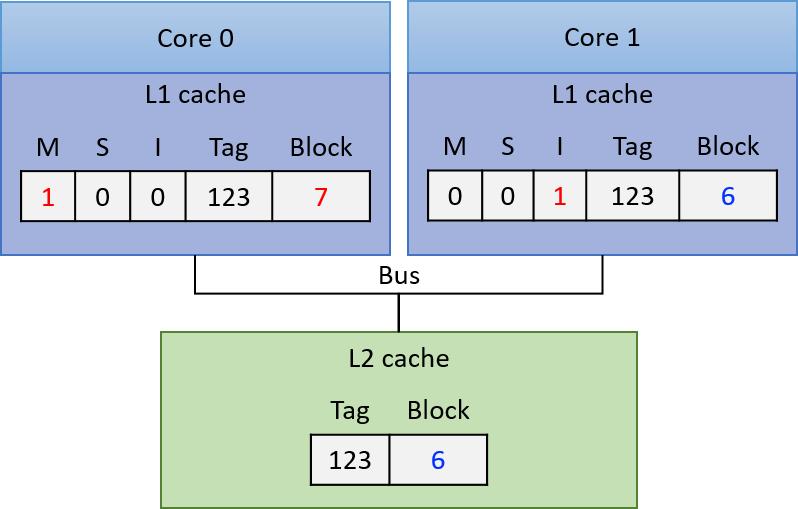

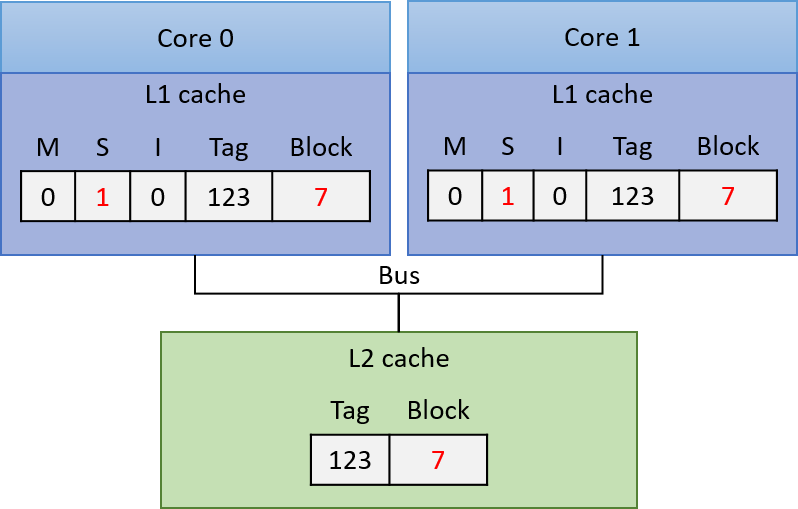

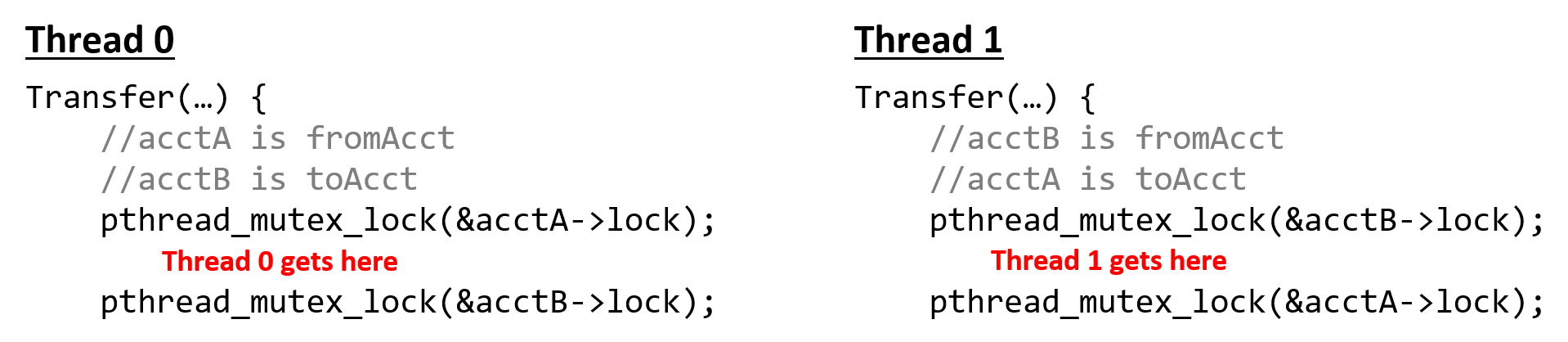

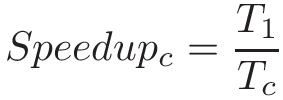

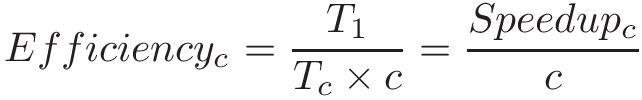

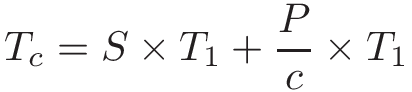

Chapter 14, Shared Memory Parallelism: Covers multicore processors, threads and Pthreads programming, synchronization, race conditions, and deadlock. This chapter includes some advanced topics on measuring parallel performance (speed-up, efficiency, Amdahl’s law), thread safety, and cache coherence.

-

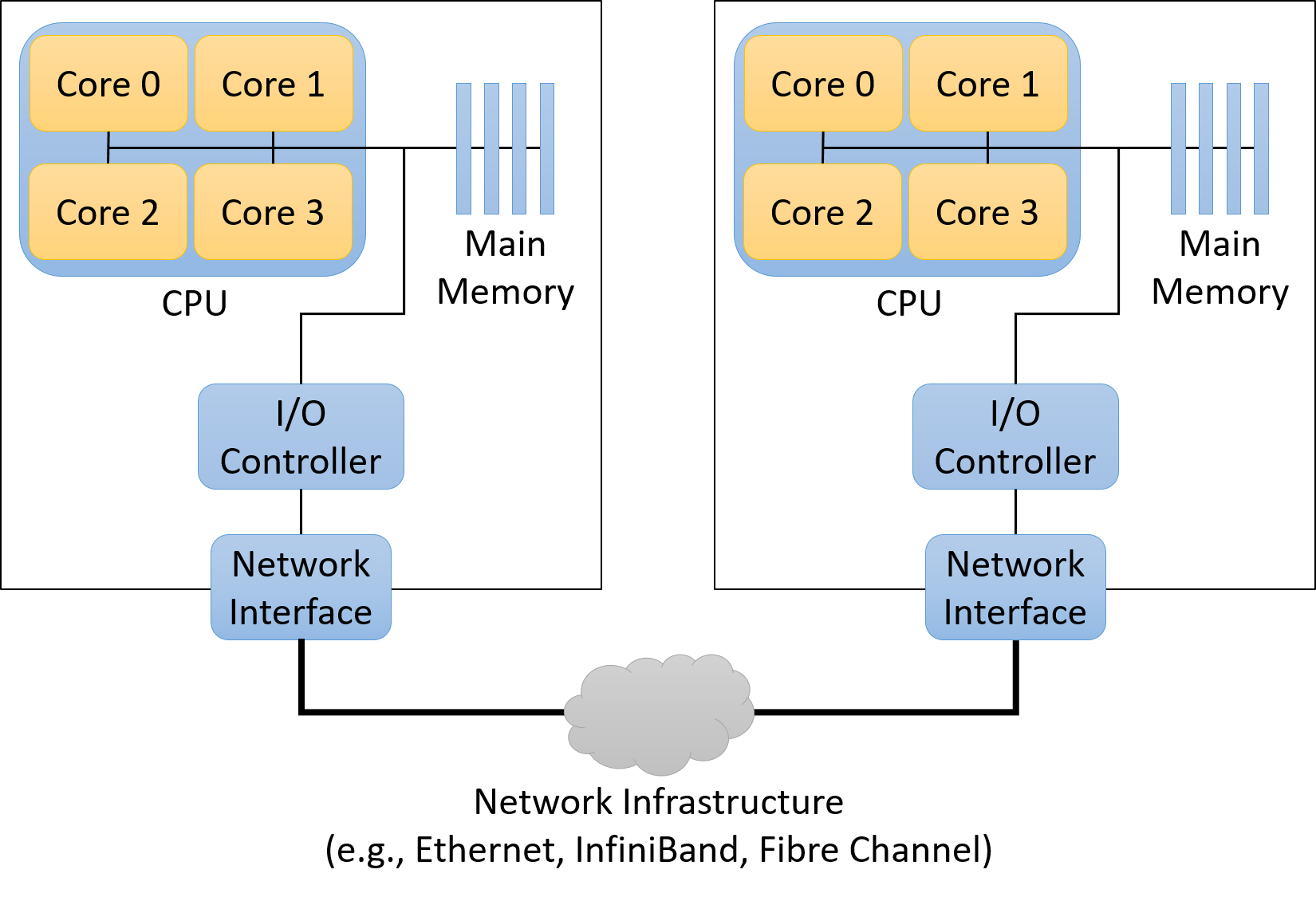

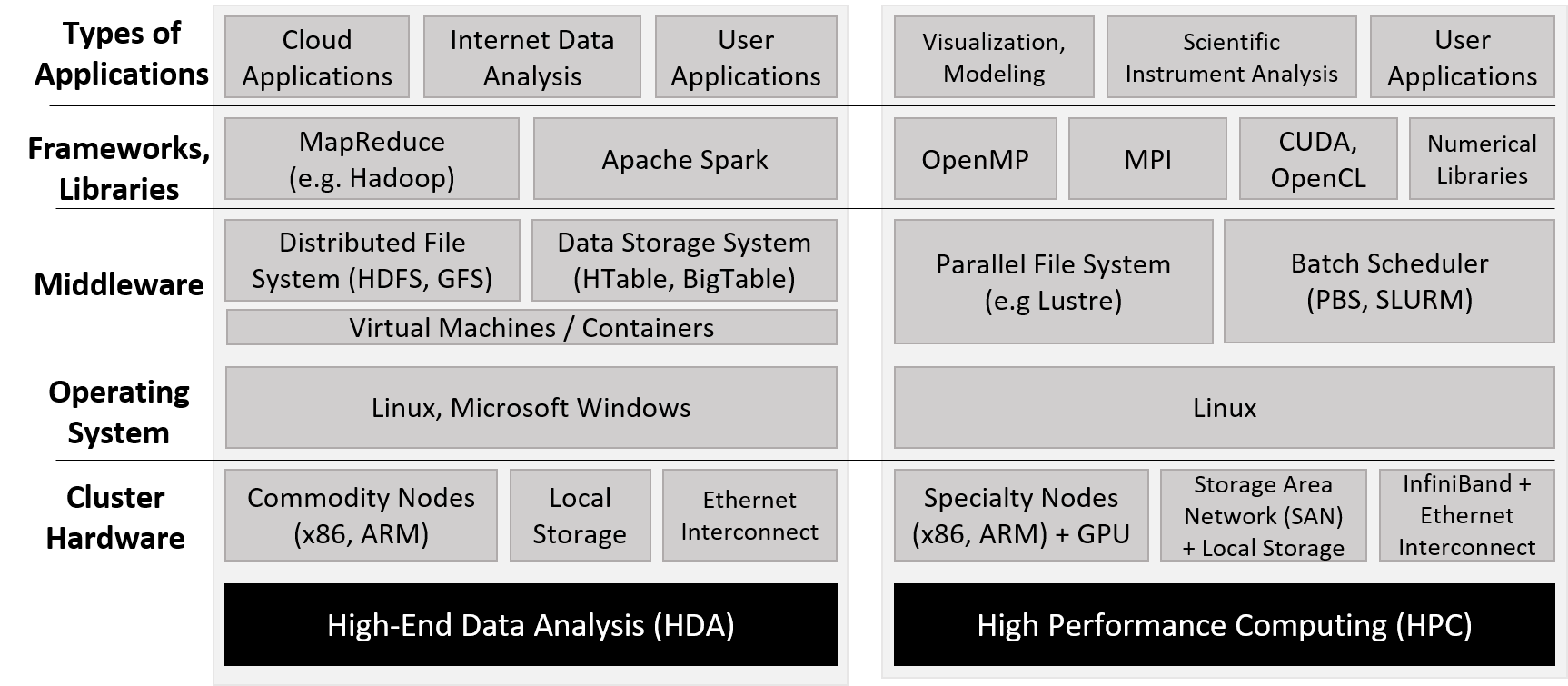

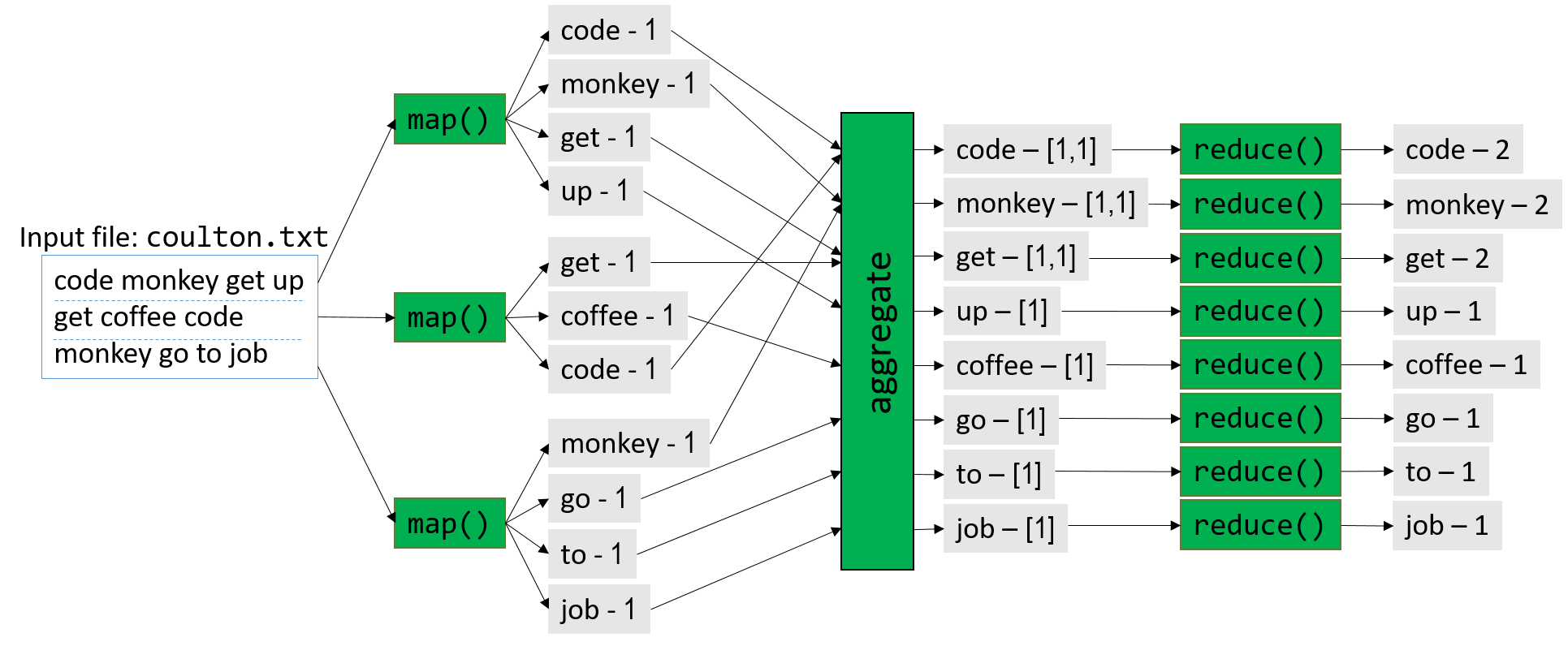

Chapter 15, Advanced Parallel Systems and Programming Models: Introduces the basics of distributed memory systems and the Message Passing Interface (MPI), hardware accelerators and CUDA, and cloud computing and MapReduce.

Example Uses of This Book

Dive into Systems can be used as a primary textbook for courses that introduce computer systems topics, or individual chapters can be used to provide background information in courses that cover topics in more depth.

As examples from the authors' two institutions, we have been using it as the primary textbook for two different intermediate-level courses:

-

Introduction To Computer Systems at Swarthmore College. Chapter ordering: 4, 1 (some 3), 5, 6, 7, 10, 2 (more 3), 11, 13, 14.

-

Computer Organization at West Point. Chapter ordering: 1, 4, 2 (some 3), 6, 7, 10, 11, 12, 13, 14, 15.

Additionally, we use individual chapters as background reading in many of our upper-level courses, including:

| Upper-level Course Topic | Chapters for Background Readings |

|---|---|

Architecture |

5, 11 |

Compilers |

6, 7, 8, 9, 10, 11, 12 |

Database Systems |

11, 14, 15 |

Networking |

4, 13, 14 |

Operating Systems |

11, 13, 14 |

Parallel and Distributed Systems |

11, 13, 14, 15 |

Finally, Chapters 2 and 3 are used as C programming and debugging references in many of our courses.

Available Online

The free online version of our textbook is available at https://diveintosystems.org/.

0. Introduction

Dive into the fabulous world of computer systems! Understanding what a computer system is and how it runs your programs can help you to design code that runs efficiently and that can make the best use of the power of the underlying system. In this book, we take you on a journey through computer systems. You will learn how your program written in a high-level programming language (we use C) executes on a computer. You will learn how program instructions translate into binary and how circuits execute their binary encoding. You will learn how an operating system manages programs running on the system. You will learn how to write programs that can make use of multicore computers. Throughout, you will learn how to evaluate the systems costs associated with program code and how to design programs to run efficiently.

What Is a Computer System?

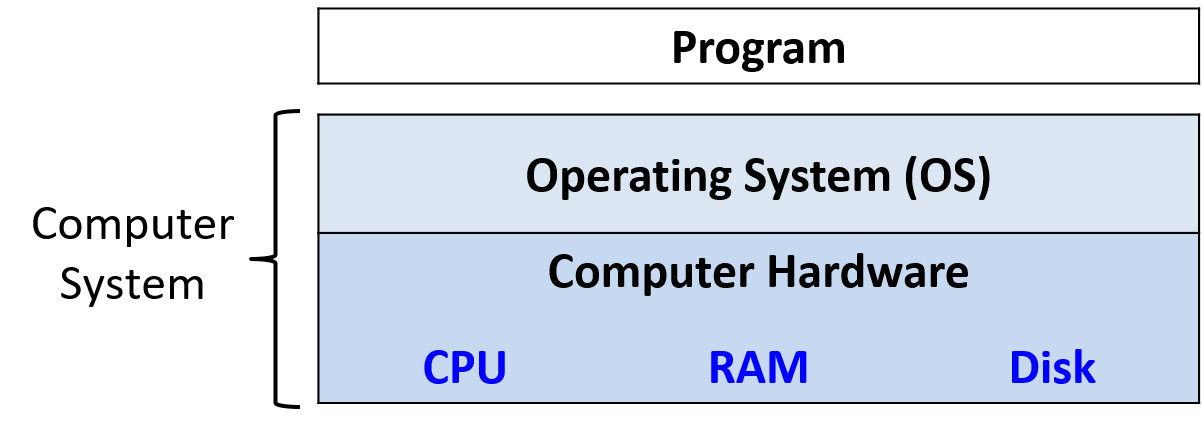

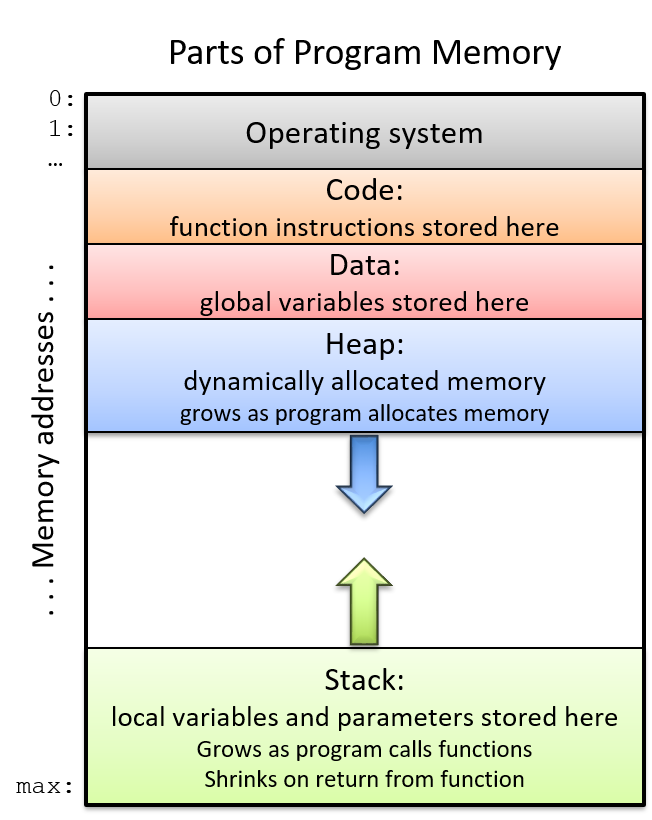

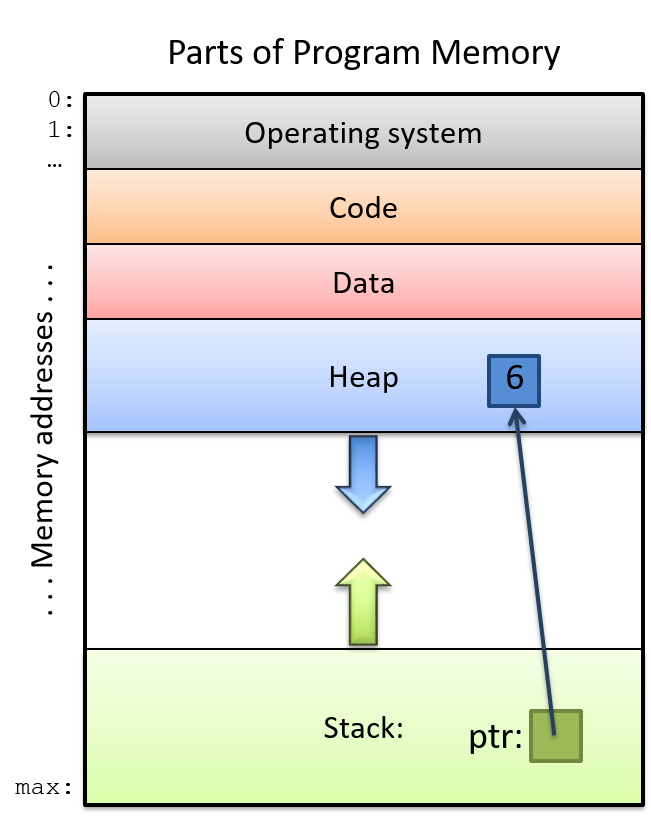

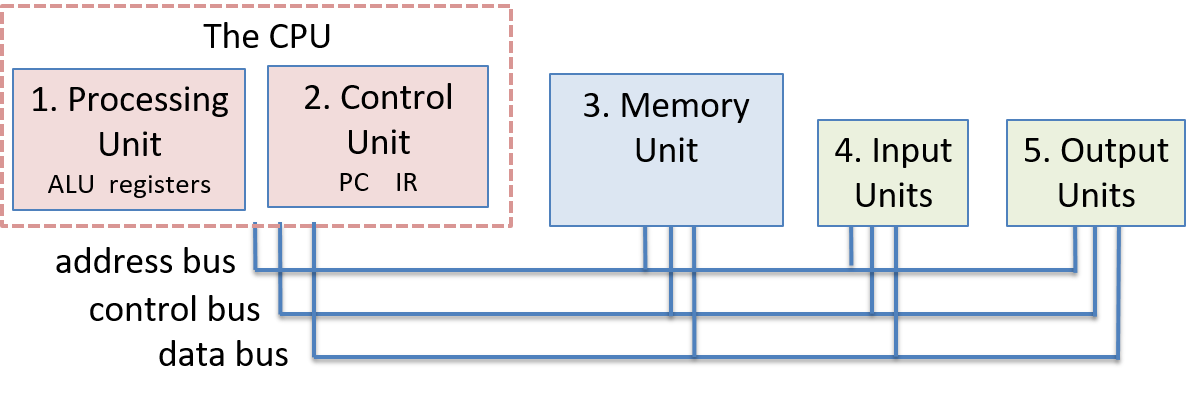

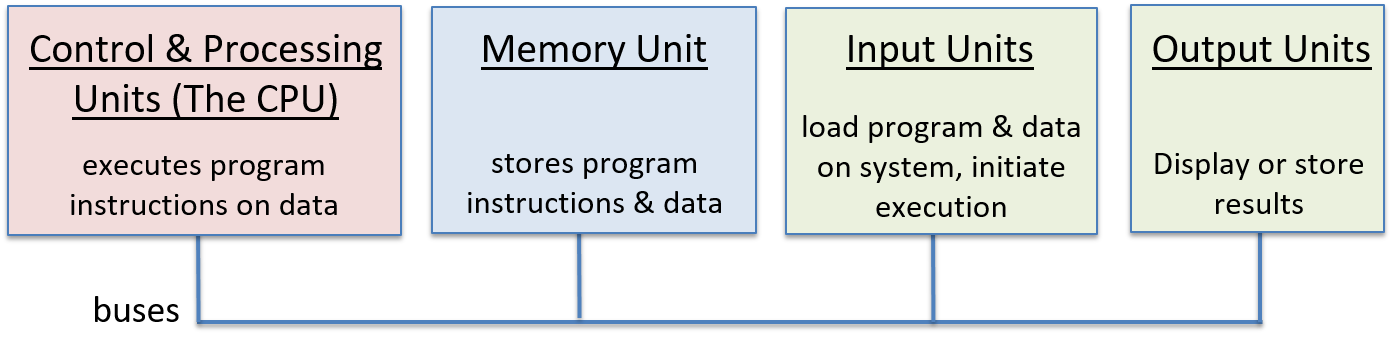

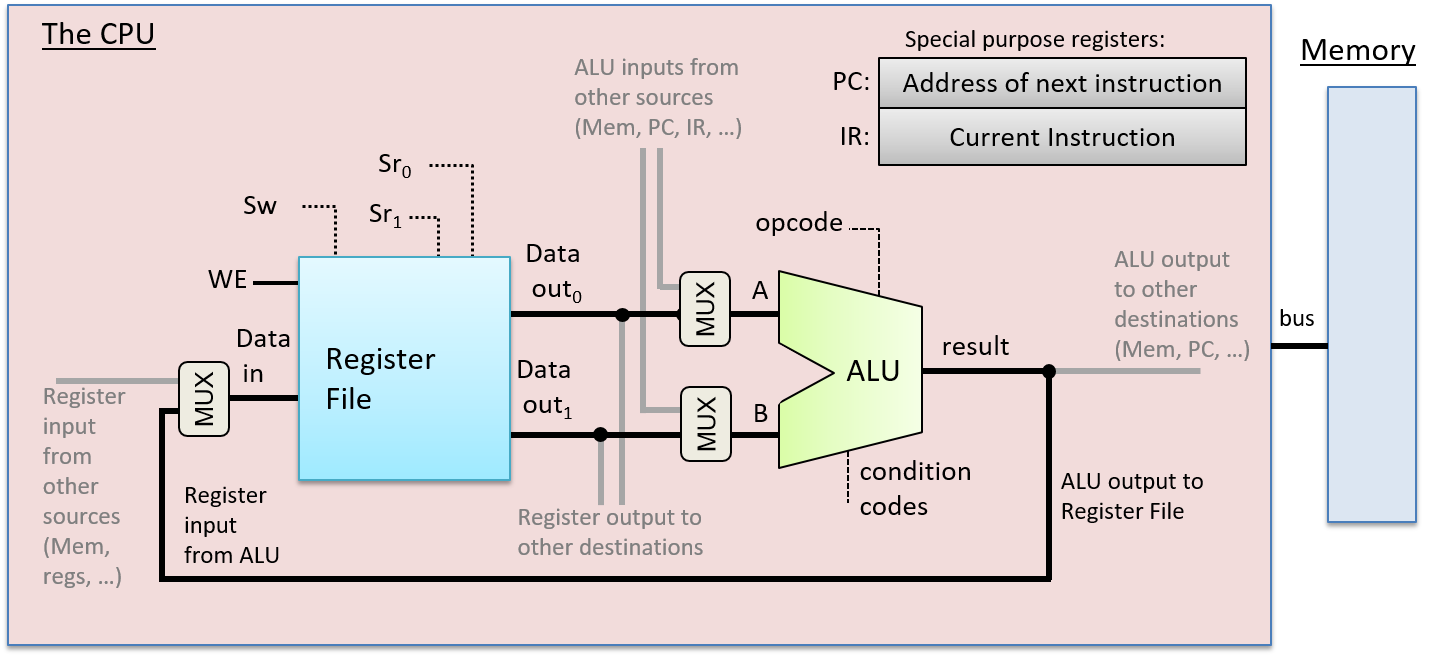

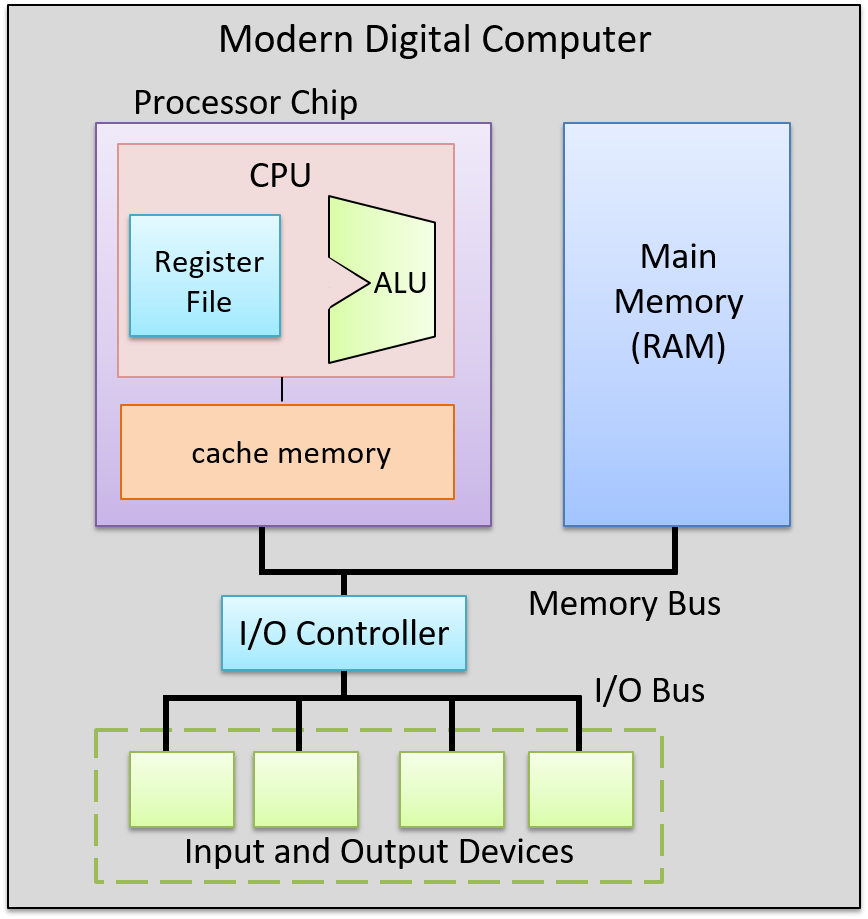

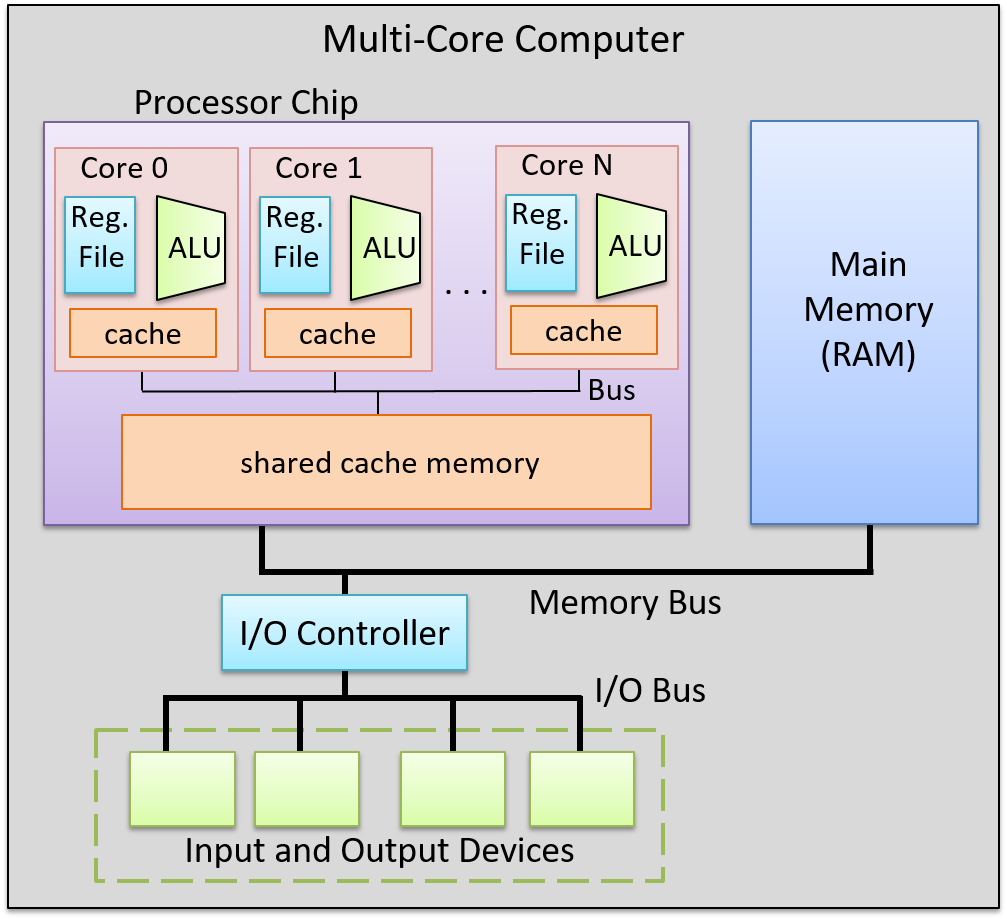

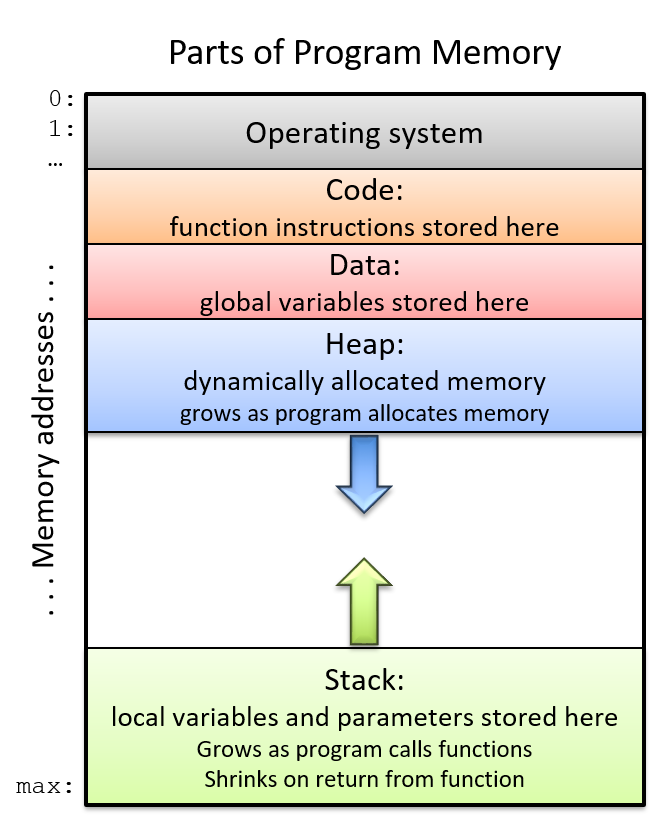

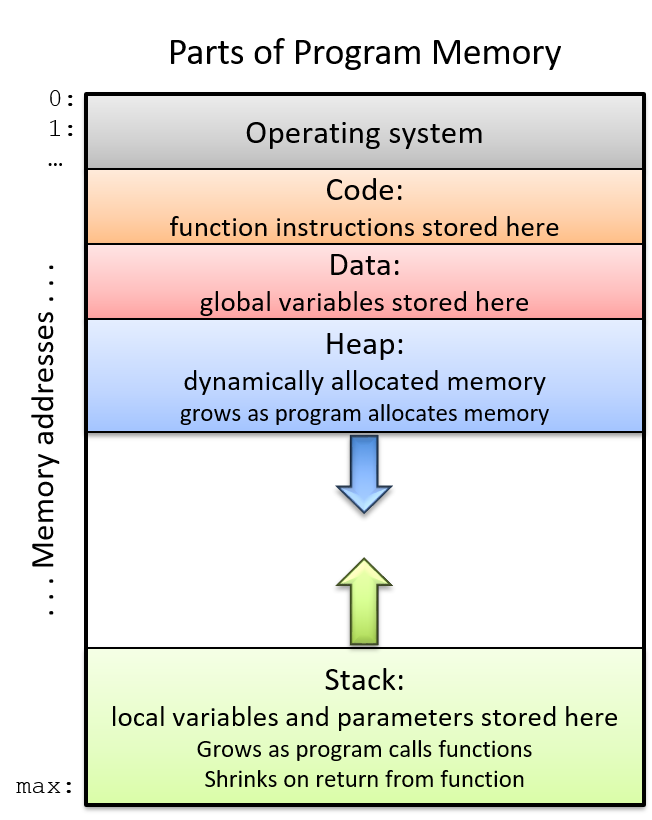

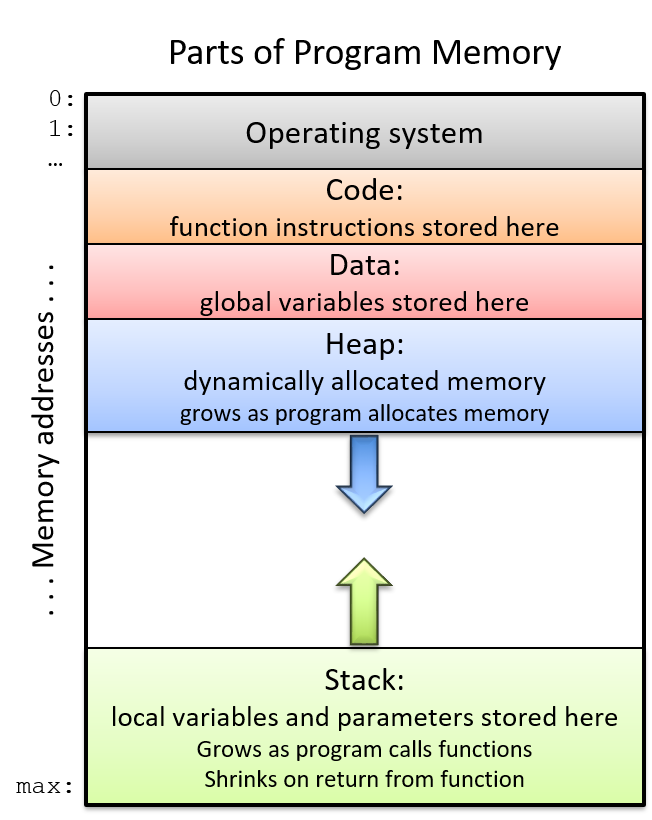

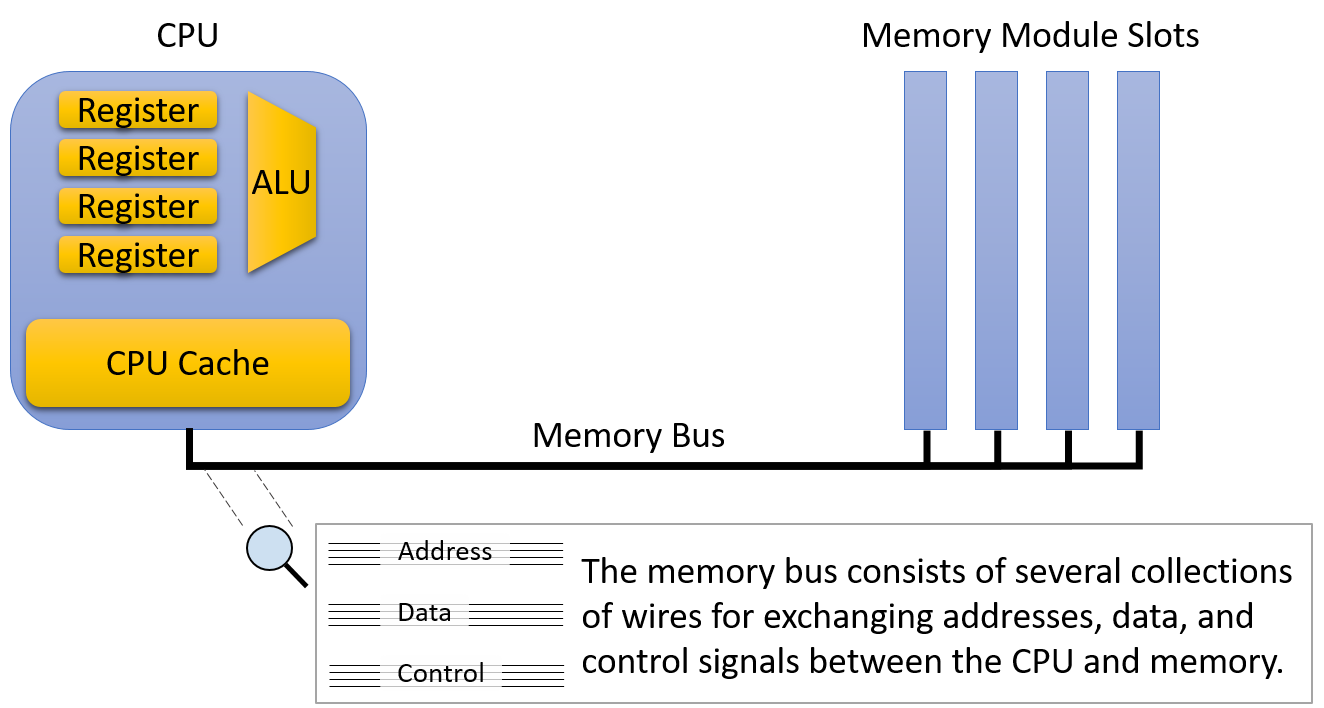

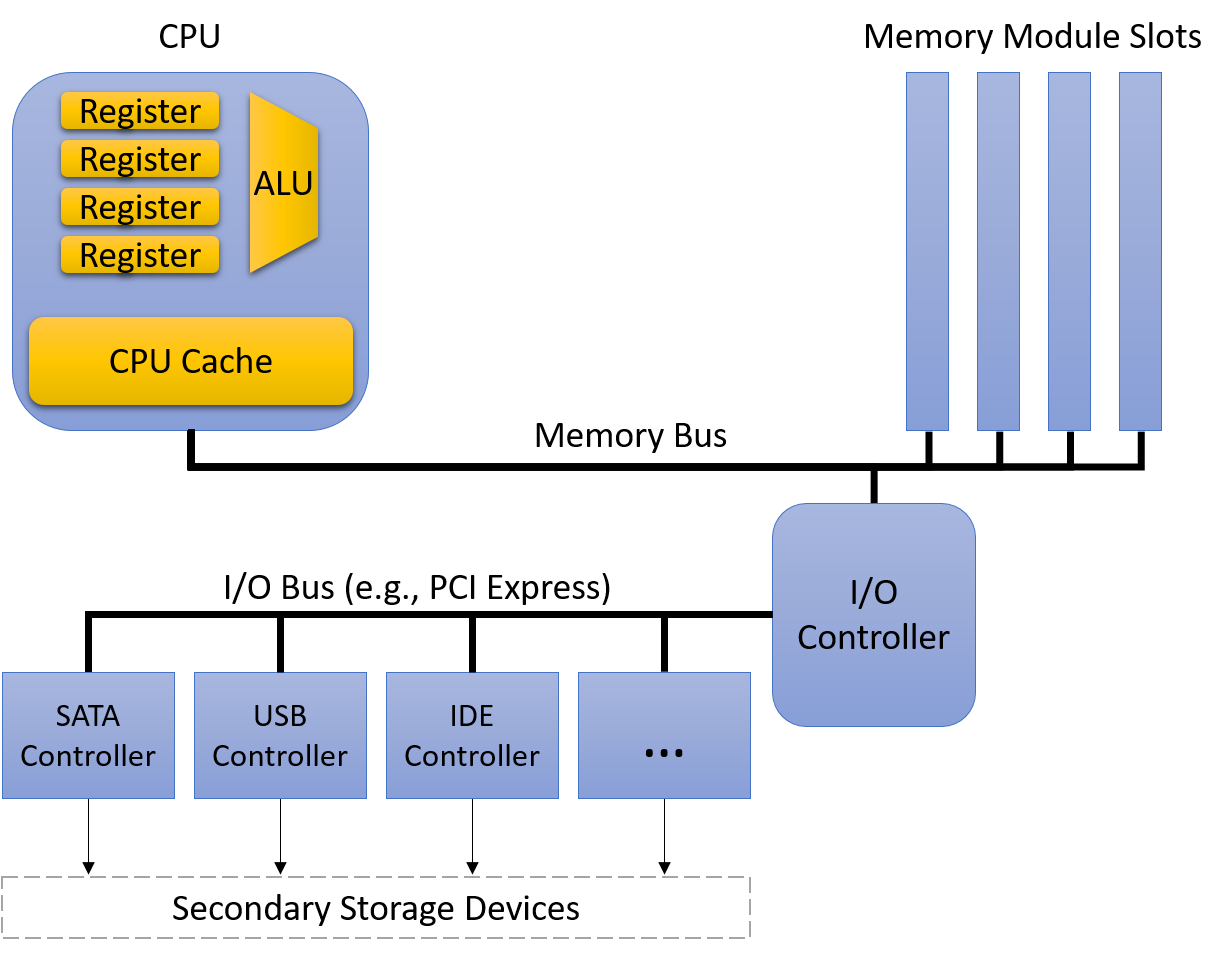

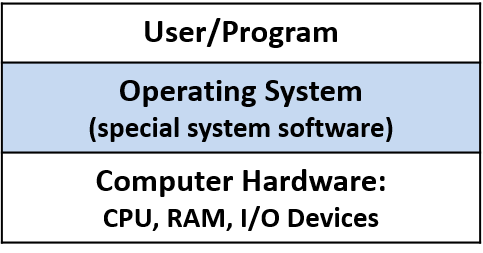

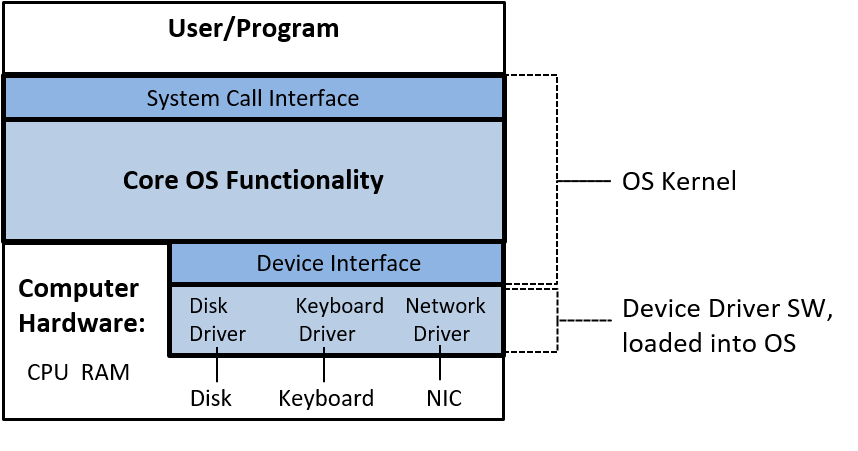

A computer system combines the computer hardware and special system software that together make the computer usable by users and programs. Specifically, a computer system has the following components (see Figure 1):

-

Input/output (IO) ports enable the computer to take information from its environment and display it back to the user in some meaningful way.

-

A central processing unit (CPU) runs instructions and computes data and memory addresses.

-

Random access memory (RAM) stores the data and instructions of running programs. The data and instructions in RAM are typically lost when the computer system loses power.

-

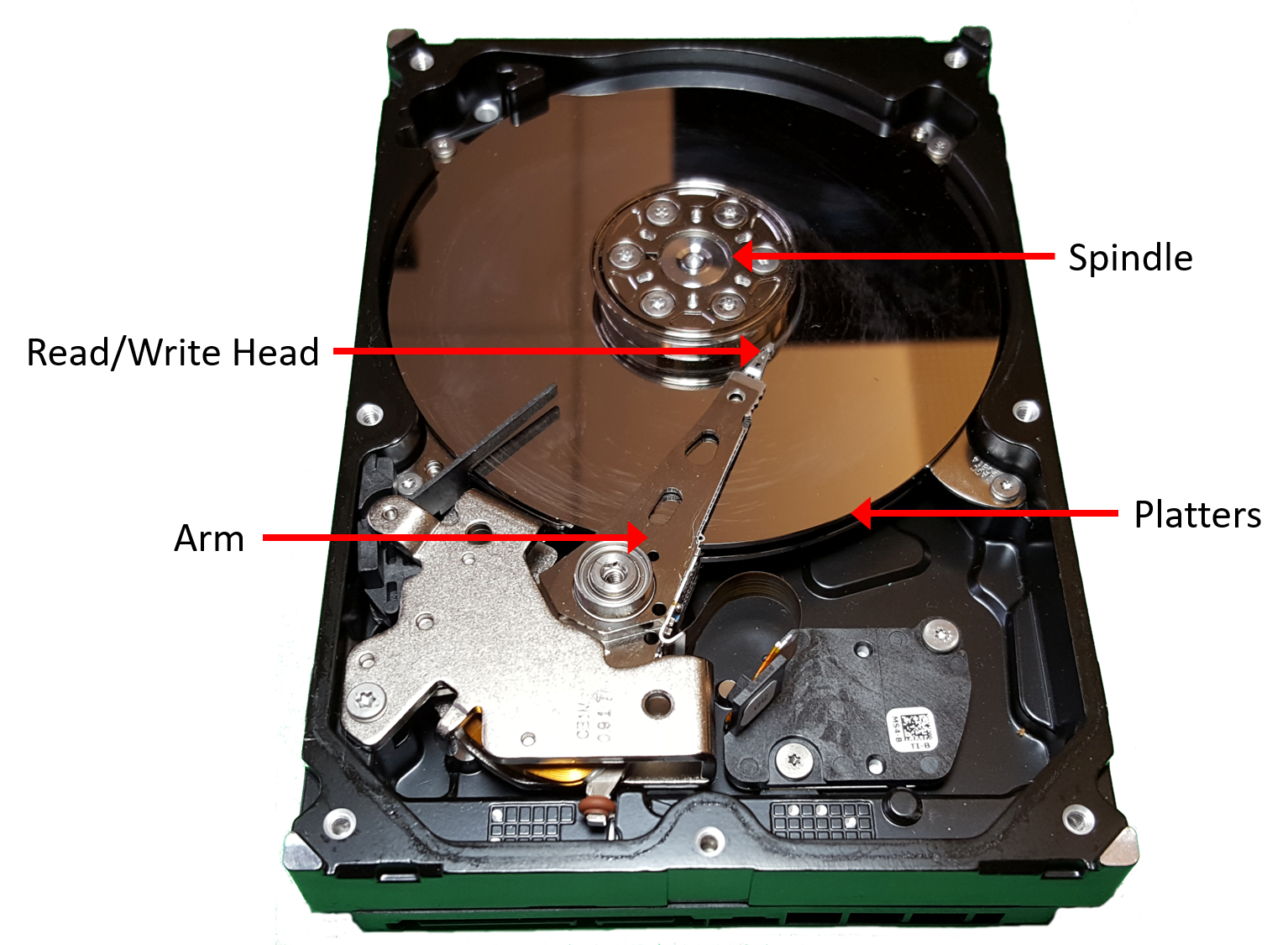

Secondary storage devices like hard disks store programs and data even when power is not actively being provided to the computer.

-

An operating system (OS) software layer lies between the hardware of the computer and the software that a user runs on the computer. The OS implements programming abstractions and interfaces that enable users to easily run and interact with programs on the system. It also manages the underlying hardware resources and controls how and when programs execute. The OS implements abstractions, policies, and mechanisms to ensure that multiple programs can simultaneously run on the system in an efficient, protected, and seamless manner.

The first four of these define the computer hardware component of a computer system. The last item (the operating system) represents the main software part of the computer system. There may be additional software layers on top of an OS that provide other interfaces to users of the system (e.g., libraries). However, the OS is the core system software that we focus on in this book.

We focus specifically on computer systems that have the following qualities:

-

They are general purpose, meaning that their function is not tailored to any specific application.

-

They are reprogrammable, meaning that they support running a different program without modifying the computer hardware or system software.

To this end, many devices that may "compute" in some form do not fall into the category of a computer system. Calculators, for example, typically have a processor, limited amounts of memory, and I/O capability. However, calculators typically do not have an operating system (advanced graphing calculators like the TI-89 are a notable exception to this rule), do not have secondary storage, and are not general purpose.

Another example that bears mentioning is the microcontroller, a type of integrated circuit that has many of the same capabilities as a computer. Microcontrollers are often embedded in other devices (such as toys, medical devices, cars, and appliances), where they control a specific automatic function. Although microcontrollers are general purpose, reprogrammable, contain a processor, internal memory, secondary storage, and are I/O capable, they lack an operating system. A microcontroller is designed to boot and run a single specific program until it loses power. For this reason, a microcontroller does not fit our definition of a computer system.

What Do Modern Computer Systems Look Like?

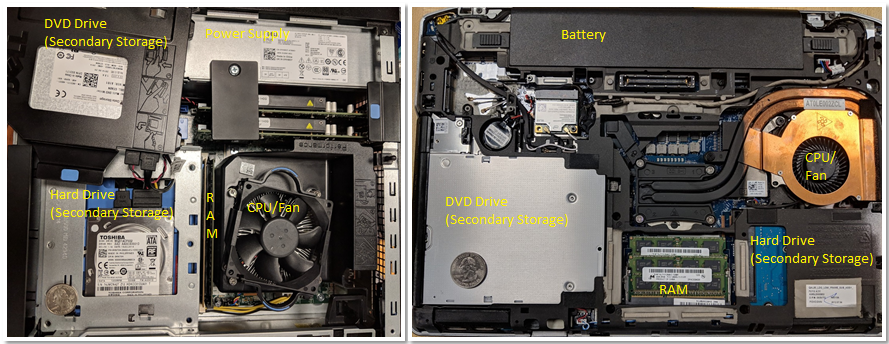

Now that we have established what a computer system is (and isn’t), let’s discuss what computer systems typically look like. Figure 2 depicts two types of computer hardware systems (excluding peripherals): a desktop computer (left) and a laptop computer (right). A U.S. quarter on each device gives the reader an idea of the size of each unit.

Notice that both contain the same hardware components, though some of the components may have a smaller form factor or be more compact. The DVD/CD bay of the desktop was moved to the side to show the hard drive underneath — the two units are stacked on top of each other. A dedicated power supply helps provide the desktop power.

In contrast, the laptop is flatter and more compact (note that the quarter in this picture appears a bit bigger). The laptop has a battery and its components tend to be smaller. In both the desktop and the laptop, the CPU is obscured by a heavyweight CPU fan, which helps keep the CPU at a reasonable operating temperature. If the components overheat, they can become permanently damaged. Both units have dual inline memory modules (DIMM) for their RAM units. Notice that laptop memory modules are significantly smaller than desktop modules.

In terms of weight and power consumption, desktop computers typically consume 100 - 400 W of power and typically weigh anywhere from 5 to 20 pounds. A laptop typically consumes 50 - 100 W of power and uses an external charger to supplement the battery as needed.

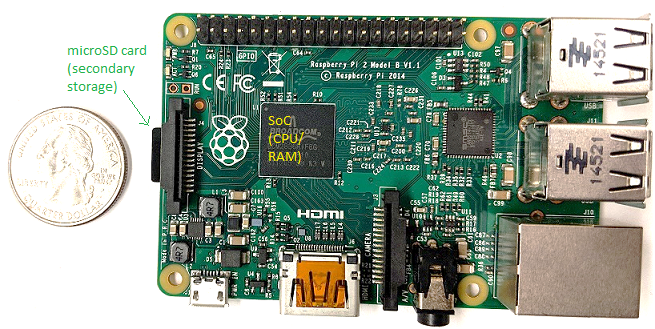

The trend in computer hardware design is toward smaller and more compact devices. Figure 3 depicts a Raspberry Pi single-board computer. A single-board computer (SBC) is a device in which the entirety of the computer is printed on a single circuit board.

The Raspberry Pi SBC contains a system-on-a-chip (SoC) processor with integrated RAM and CPU, which encompasses much of the laptop and desktop hardware shown in Figure 2. Unlike laptop and desktop systems, the Raspberry Pi is roughly the size of a credit card, weighs 1.5 ounces (about a slice of bread), and consumes about 5 W of power. The SoC technology found on the Raspberry Pi is also commonly found in smartphones. In fact, the smartphone is another example of a computer system!

Lastly, all of the aforementioned computer systems (Raspberry Pi and smartphones included) have multicore processors. In other words, their CPUs are capable of executing multiple programs simultaneously. We refer to this simultaneous execution as parallel execution. Basic multicore programming is covered in Chapter 14 of this book.

All of these different types of computer hardware systems can run one or more general purpose operating systems, such as macOS, Windows, or Unix. A general-purpose operating system manages the underlying computer hardware and provides an interface for users to run any program on the computer. Together these different types of computer hardware running different general-purpose operating systems make up a computer system.

What You Will Learn In This Book

By the end of this book, you will know the following:

How a computer runs a program: You will be able to describe, in detail, how a program expressed in a high-level programming language gets executed by the low-level circuitry of the computer hardware. Specifically, you will know:

-

how program data gets encoded into binary and how the hardware performs arithmetic on it

-

how a compiler translates C programs into assembly and binary machine code (assembly is the human-readable form of binary machine code)

-

how a CPU executes binary instructions on binary program data, from basic logic gates to complex circuits that store values, perform arithmetic, and control program execution

-

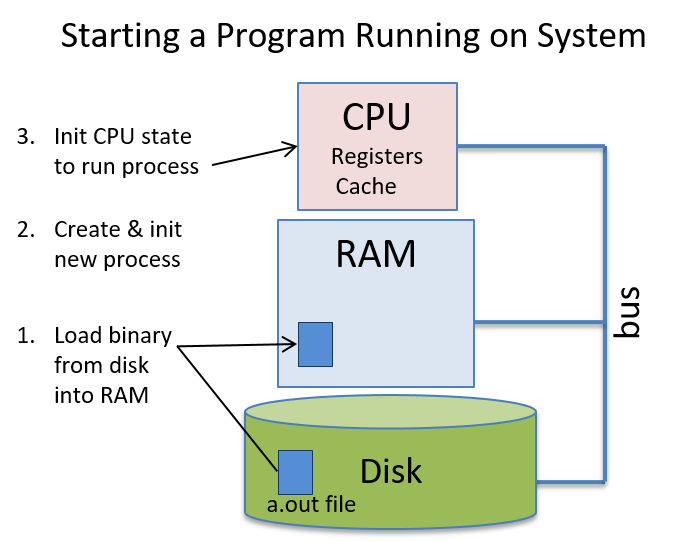

how the OS implements the interface for users to run programs on the system and how it controls program execution on the system while managing the system’s resources.

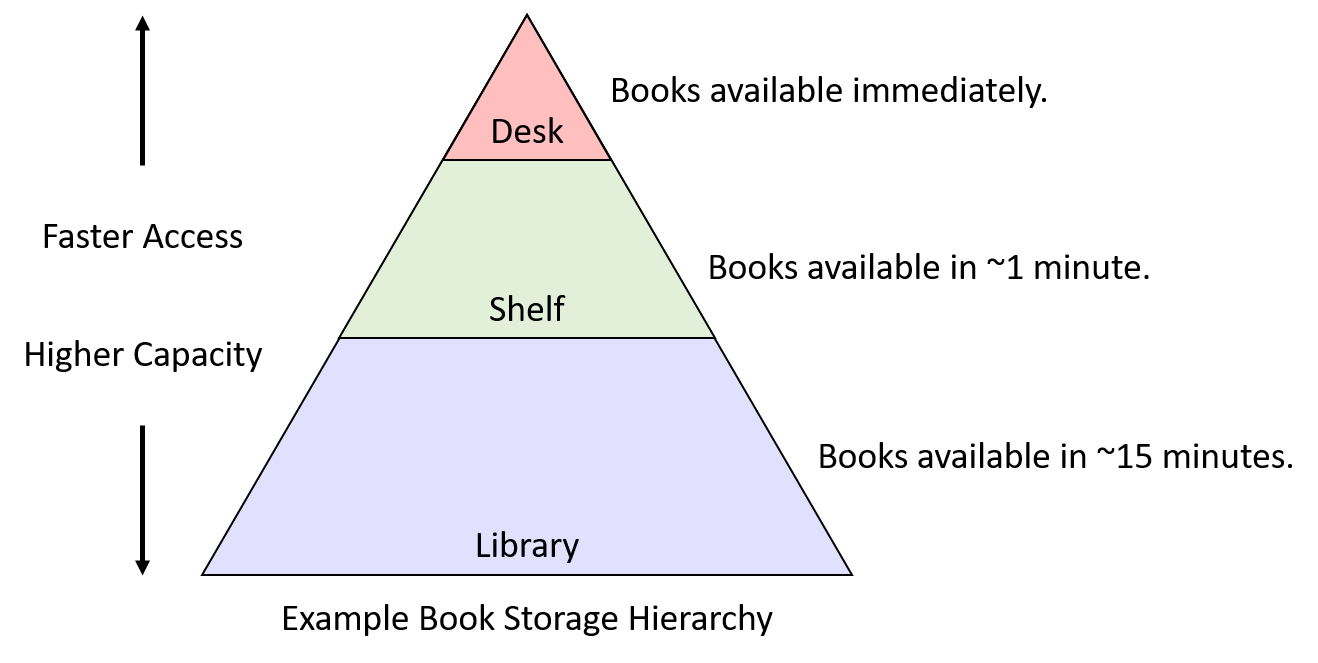

How to evaluate systems costs associated with a program’s performance: A program runs slowly for a number of reasons. It could be a bad algorithm choice or simply bad choices on how your program uses system resources. You will understand the Memory Hierarchy and its effects on program performance, and the operating systems costs associated with program performance. You will also learn some valuable tips for code optimization. Ultimately, you will be able to design programs that use system resources efficiently, and you will know how to evaluate the systems costs associated with program execution.

How to leverage the power of parallel computers with parallel programming: Taking advantage of parallel computing is important in today’s multicore world. You will learn to exploit the multiple cores on your CPU to make your program run faster. You will know the basics of multicore hardware, the OS’s thread abstraction, and issues related to multithreaded parallel program execution. You will have experience with parallel program design and writing multithreaded parallel programs using the POSIX thread library (Pthreads). You will also have an introduction to other types of parallel systems and parallel programming models.

Along the way, you will also learn many other important details about computer systems, including how they are designed and how they work. You will learn important themes in systems design and techniques for evaluating the performance of systems and programs. You’ll also master important skills, including C and assembly programming and debugging.

Getting Started with This Book

A few notes about languages, book notation, and recommendations for getting started reading this book:

Linux, C, and the GNU Compiler

We use the C programming language in examples throughout the book. C is a high-level programming language like Java and Python, but it is less abstracted from the underlying computer system than many other high-level languages. As a result, C is the language of choice for programmers who want more control over how their program executes on the computer system.

The code and examples in this book are compiled using the GNU C Compiler (GCC) and run on the Linux operating system. Although not the most common mainstream OS, Linux is the dominant OS on supercomputing systems and is arguably the most commonly used OS by computer scientists.

Linux is also free and open source, which contributes to its popular use in these settings. A working knowledge of Linux is an asset to all students in computing. Similarly, GCC is arguably the most common C compiler in use today. As a result, we use Linux and GCC in our examples. However, other Unix systems and compilers have similar interfaces and functionality.

In this book, we encourage you to type along with the listed examples. Linux commands appear in blocks like the following:

$

The $ represents the command prompt. If you see a box that looks

like

$ uname -a

this is an indication to type uname -a on the command line. Make sure that

you don’t type the $ sign!

The output of a command is usually shown directly after the command in a command line listing.

As an example, try typing in uname -a. The output of this command varies from system to

system. Sample output for a 64-bit system is shown here.

$ uname -a Linux Fawkes 4.4.0-171-generic #200-Ubuntu SMP Tue Dec 3 11:04:55 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

The uname command prints out information about a particular system. The -a flag prints out all relevant information associated with the system

in the following order:

-

The kernel name of the system (in this case Linux)

-

The hostname of the machine (e.g., Fawkes)

-

The kernel release (e.g., 4.4.0-171-generic)

-

The kernel version (e.g., #200-Ubuntu SMP Tue Dec 3 11:04:55 UTC 2019)

-

The machine hardware (e.g., x86_64)

-

The type of processor (e.g., x86_64)

-

The hardware platform (e.g., x86_64)

-

The operating system name (e.g., GNU/Linux)

You can learn more about the uname command or any other Linux command by prefacing the command with man,

as shown here:

$ man uname

This command brings up the manual page associated with the uname command. To quit out of this interface,

press the q key.

While a detailed coverage of Linux is beyond the scope of this book, readers can get a good introduction in the online Appendix 2 - Using UNIX. There are also several online resources that can give readers a good overview. One recommendation is "The Linux Command Line"1.

Other Types of Notation and Callouts

Aside from the command line and code snippets, we use several other types of "callouts" to represent content in this book.

The first is the aside. Asides are meant to provide additional context to the text, usually historical. Here’s a sample aside:

The second type of callout we use in this text is the note. Notes are used to highlight important information, such as the use of certain types of notation or suggestions on how to digest certain information. A sample note is shown below:

|

How to do the readings in this book

As a student, it is important to do the readings in the textbook. Notice that we say "do" the readings, not simply "read" the readings. To "read" a text typically implies passively imbibing words off a page. We encourage students to take a more active approach. If you see a code example, try typing it in! It’s OK if you type in something wrong, or get errors; that’s the best way to learn! In computing, errors are not failures — they are simply experience. |

The last type of callout that students should pay specific attention to is the warning. The authors use warnings to highlight things that are common "gotchas" or a common cause of consternation among our own students. Although all warnings may not be equally valuable to all students, we recommend that you review warnings to avoid common pitfalls whenever possible. A sample warning is shown here:

|

This book contains puns

The authors (especially the first author) are fond of puns and musical parodies related to computing (and not necessarily good ones). Adverse reactions to the authors' sense of humor may include (but are not limited to) eye-rolling, exasperated sighs, and forehead slapping. |

If you are ready to get started, please continue on to the first chapter as we dive into the wonderful world of C. If you already know some C programming, you may want to start with Chapter 4 on binary representation, or continue with more advanced C programming in Chapter 2.

We hope you enjoy your journey with us!

References

-

William Shotts. "The Linux Command Line", LinuxCommand.org, https://linuxcommand.org/

1. By the C, by the C, by the Beautiful C

"By the Beautiful Sea", Carroll and Atteridge, 1914

This chapter presents an overview of C programming written for students who have some experience programming in another language. It’s specifically written for Python programmers and uses a few Python examples for comparison purposes (Appendix 1 is a version of Chapter 1 for Java programmers). However, it should be useful as an introduction to C programming for anyone with basic programming experience in any language.

C is a high-level programming language like other languages you might know,

such as Python, Java, Ruby, or C++. It’s an imperative and a procedural

programming language, which means that a C program is expressed as a sequence

of statements (steps) for the computer to execute and that C programs are

structured as a set of functions (procedures). Every C program must have at

least one function, the main function, which contains the set of statements

that execute when the program begins.

The C programming language is less abstracted from the computer’s machine language than some other languages with which you might be familiar. This means that C doesn’t have support for object-oriented programming (like Python, Java, and C++) or have a rich set of high-level programming abstractions (such as strings, lists, and dictionaries in Python). As a result, if you want to use a dictionary data structure in your C program, you need to implement it yourself, as opposed to just importing the one that is part of the programming language (as in Python).

C’s lack of high-level abstractions might make it seem like a less appealing programming language to use. However, being less abstracted from the underlying machine makes C easier for a programmer to see and understand the relationship between a program’s code and the computer’s execution of it. C programmers retain more control over how their programs execute on the hardware, and they can write code that runs more efficiently than equivalent code written using the higher-level abstractions provided by other programming languages. In particular, they have more control over how their programs manage memory, which can have a significant impact on performance. Thus, C remains the de facto language for computer systems programming where low-level control and efficiency are crucial.

We use C in this book because of its expressiveness of program control and its relatively straightforward translation to assembly and machine code that a computer executes. This chapter introduces programming in C, beginning with an overview of its features. Chapter 2 then describes C’s features in more detail.

1.1. Getting Started Programming in C

Let’s start by looking at a "hello world" program that includes an example of

calling a function from the math library. In Table 1 we compare the C

version of this program to the Python version. The C version might be put in a

file named hello.c (.c is the suffix convention for C source code files),

whereas the Python version might be in a file named hello.py.

| Python version (hello.py) | C version (hello.c) |

|---|---|

|

|

Notice that both versions of this program have similar structure and language constructs, albeit with different language syntax. In particular:

Comments:

-

In Python, multiline comments begin and end with

''', and single-line comments begin with#. -

In C, multiline comments begin with

/*and end with*/, and single-line comments begin with//.

Importing library code:

-

In Python, libraries are included (imported) using

import. -

In C, libraries are included (imported) using

#include. All#includestatements appear at the top of the program, outside of function bodies.

Blocks:

-

In Python, indentation denotes a block.

-

In C, blocks (for example, function, loop, and conditional bodies) start with

{and end with}.

The main function:

-

In Python,

def main():defines the main function. -

In C,

int main(void){ }defines the main function. Themainfunction returns a value of typeint, which is C’s name for specifying the signed integer type (signed integers are values like -3, 0, 1234). Themainfunction returns theintvalue 0 to signify running to completion without error. Thevoidmeans it doesn’t expect to receive a parameter. Future sections show howmaincan take parameters to receive command line arguments.

Statements:

-

In Python, each statement is on a separate line.

-

In C, each statement ends with a semicolon

;. In C, statements must be within the body of some function (inmainin this example).

Output:

-

In Python, the

printfunction prints a formatted string. Values for the placeholders in the format string follow a%symbol in a comma-separated list of values (for example, the value ofsqrt(4)will be printed in place of the%fplaceholder in the format string). -

In C, the

printffunction prints a formatted string. Values for the placeholders in the format string are additional arguments separated by commas (for example, the value ofsqrt(4)will be printed in place of the%fplaceholder in the format string).

There are a few important differences to note in the C and Python versions of this program:

Indentation: In C, indentation doesn’t have meaning, but it’s good programming style to indent statements based on the nested level of their containing block.

Output: C’s printf function doesn’t automatically print a newline character

at the end like Python’s print function does. As a result, C programmers

need to explicitly specify a newline character (\n) in the format string when

a newline is desired in the output.

main function:

-

A C program must have a function named

main, and its return type must beint. This means that themainfunction returns a signed integer type value. Python programs don’t need to name their main functionmain, but they often do by convention. -

The C

mainfunction has an explicitreturnstatement to return anintvalue (by convention,mainshould return0if the main function is successfully executed without errors). -

A Python program needs to include an explicit call to its

mainfunction to run it when the program executes. In C, itsmainfunction is automatically called when the C program executes.

1.1.1. Compiling and Running C Programs

Python is an interpreted programming language, which means that another

program, the Python interpreter, runs Python programs: the Python interpreter

acts like a virtual machine on which Python programs are run. To run a Python

program, the program source code (hello.py) is given as input to the Python

interpreter program that runs it. For example ($ is the Linux shell prompt):

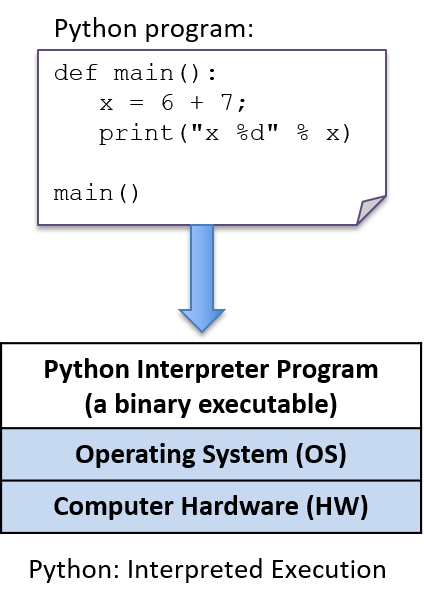

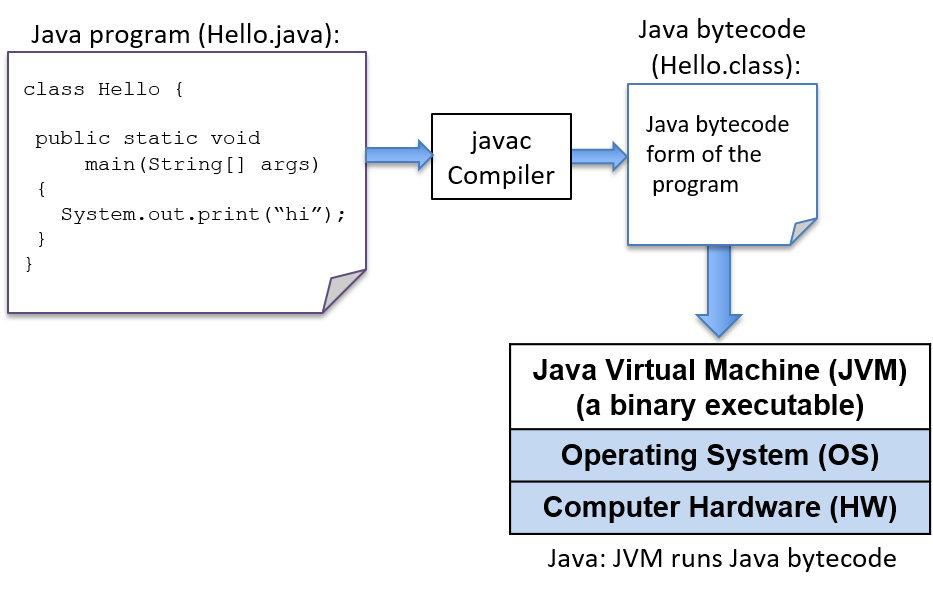

$ python hello.pyThe Python interpreter is a program that is in a form that can be run directly on the underlying system (this form is called binary executable) and takes as input the Python program that it runs (Figure 4).

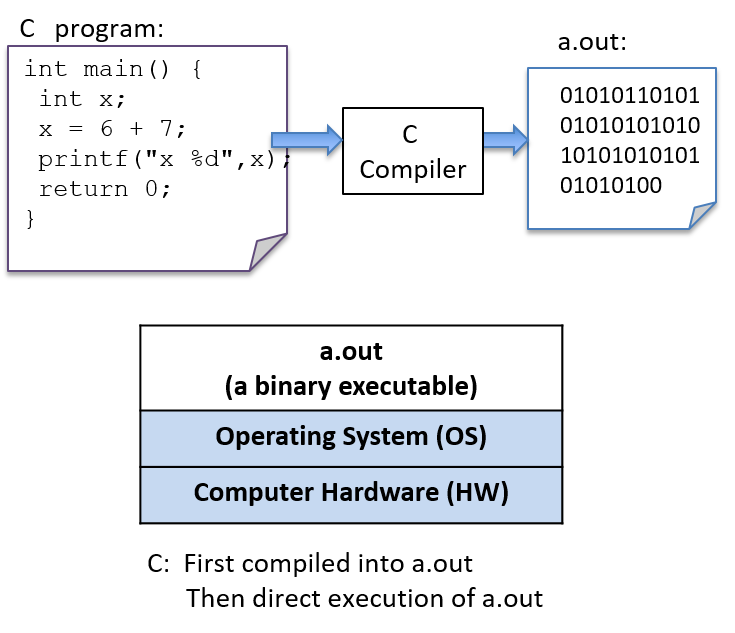

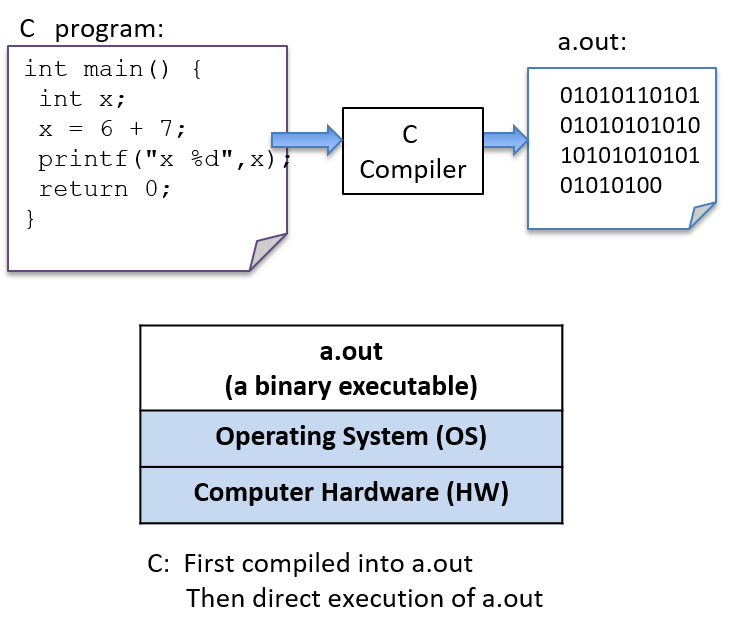

To run a C program, it must first be translated into a form that a computer system can directly execute. A C compiler is a program that translates C source code into a binary executable form that the computer hardware can directly execute. A binary executable consists of a series of 0’s and 1’s in a well-defined format that a computer can run.

For example, to run the C program hello.c on a Unix system, the C code must

first be compiled by a C compiler (for example, the GNU C

compiler, GCC) that produces a binary executable (by default named a.out).

The binary executable version of the program can then be run directly on the

system (Figure 5):

$ gcc hello.c

$ ./a.out(Note that some C compilers might need to be explicitly told to link in the math

library: -lm):

$ gcc hello.c -lm

Detailed Steps

In general, the following sequence describes the necessary steps for editing, compiling, and running a C program on a Unix system:

-

Using a text editor (for example,

vim), write and save your C source code program in a file (e.g.,hello.c):$ vim hello.c

-

Compile the source to an executable form, and then run it. The most basic syntax for compiling with

gccis:$ gcc <input_source_file>

If compilation yields no errors, the compiler creates a binary executable file named a.out. The compiler also allows you to specify the name of the binary executable file to generate using the -o flag:

$ gcc -o <output_executable_file> <input_source_file>

For example, this command instructs gcc to compile hello.c into an

executable file named hello:

$ gcc -o hello hello.c

We can invoke the executable program using ./hello:

$ ./hello

Any changes made to the C source code (the hello.c file) must be recompiled

with gcc to produce a new version of hello. If the compiler detects any

errors during compilation, the ./hello file won’t be created/re-created (but

beware, an older version of the file from a previous successful compilation might

still exist).

Often when compiling with gcc, you want to include several command line

options. For example, these options enable more compiler warnings and build a

binary executable with extra debugging information:

$ gcc -Wall -g -o hello hello.c

Because the gcc command line can be long, frequently the make utility is

used to simplify compiling C programs and for cleaning up files created by

gcc.

Using make

and writing Makefiles are important skills that you will develop as you build

up experience with C programming.

We cover compiling and linking with C library code in more detail at the end of Chapter 2.

1.1.2. Variables and C Numeric Types

Like Python, C uses variables as named storage locations for holding data. Thinking about the scope and type of program variables is important to understand the semantics of what your program will do when you run it. A variable’s scope defines when the variable has meaning (that is, where and when in your program it can be used) and its lifetime (that is, it could persist for the entire run of a program or only during a function activation). A variable’s type defines the range of values that it can represent and how those values will be interpreted when performing operations on its data.

In C, all variables must be declared before they can be used. To declare a variable, use the following syntax:

type_name variable_name;A variable can have only a single type. The basic C types include char,

int, float, and double. By convention, C variables should be declared at

the beginning of their scope (at the top of a { } block), before any C

statements in that scope.

Below is an example C code snippet that shows declarations and uses of variables of some different types. We discuss types and operators in more detail after the example.

{

/* 1. Define variables in this block's scope at the top of the block. */

int x; // declares x to be an int type variable and allocates space for it

int i, j, k; // can define multiple variables of the same type like this

char letter; // a char stores a single-byte integer value

// it is often used to store a single ASCII character

// value (the ASCII numeric encoding of a character)

// a char in C is a different type than a string in C

float winpct; // winpct is declared to be a float type

double pi; // the double type is more precise than float

/* 2. After defining all variables, you can use them in C statements. */

x = 7; // x stores 7 (initialize variables before using their value)

k = x + 2; // use x's value in an expression

letter = 'A'; // a single quote is used for single character value

letter = letter + 1; // letter stores 'B' (ASCII value one more than 'A')

pi = 3.1415926;

winpct = 11 / 2.0; // winpct gets 5.5, winpct is a float type

j = 11 / 2; // j gets 5: int division truncates after the decimal

x = k % 2; // % is C's mod operator, so x gets 9 mod 2 (1)

}Note the semicolons galore. Recall that C statements are delineated by ;,

not line breaks — C expects a semicolon after every statement. You’ll forget

some, and gcc almost never informs you that you missed a semicolon, even

though that might be the only syntax error in your program. In fact, often

when you forget a semicolon, the compiler indicates a syntax error on the

line after the one with the missing semicolon: the reason is that gcc

interprets it as part of the statement from the previous line. As you continue

to program in C, you’ll learn to correlate gcc errors with the specific C

syntax mistakes that they describe.

1.1.3. C Types

C supports a small set of built-in data types, and it provides a few ways in which programmers can construct basic collections of types (arrays and structs). From these basic building blocks, a C programmer can build complex data structures.

C defines a set of basic types for storing numeric values. Here are some examples of numeric literal values of different C types:

8 // the int value 8

3.4 // the double value 3.4

'h' // the char value 'h' (its value is 104, the ASCII value of h)The C char type stores a numeric value. However, it’s often used by

programmers to store the value of an ASCII character. A character literal

value is specified in C as a single character between single quotes.

C doesn’t support a string type, but programmers can create strings from the

char type and C’s support for constructing arrays of values, which we discuss

in later sections. C does, however, support a way of expressing string literal

values in programs: a string literal is any sequence of characters between

double quotes. C programmers often pass string literals as the format string

argument to printf:

printf("this is a C string\n");Python supports strings, but it doesn’t have a char type. In C, a string and

a char are two very different types, and they evaluate differently. This

difference is illustrated by contrasting a C string literal that contains one

character with a C char literal. For example:

'h' // this is a char literal value (its value is 104, the ASCII value of h)

"h" // this is a string literal value (its value is NOT 104, it is not a char)We discuss C strings and char variables in more detail in the

Strings section later in

this chapter. Here, we’ll mainly focus on C’s numeric types.

C Numeric Types

C supports several different types for storing numeric values. The types

differ in the format of the numeric values they represent. For example, the

float and double types can represent real values, int represents signed

integer values, and unsigned int represents unsigned integer values. Real

values are positive or negative values with a decimal point, such as -1.23 or

0.0056. Signed integers store positive, negative, or zero integer values,

such as -333, 0, or 3456. Unsigned integers store strictly nonnegative

integer values, such as 0 or 1234.

C’s numeric types also differ in the range and precision of the values they can represent. The range or precision of a value depends on the number of bytes associated with its type. Types with more bytes can represent a larger range of values (for integer types), or higher-precision values (for real types), than types with fewer bytes.

Table 2 shows the number of storage bytes, the kind of numeric values stored, and how to declare a variable for a variety of common C numeric types (note that these are typical sizes — the exact number of bytes depends on the hardware architecture).

| Type name | Usual size | Values stored | How to declare |

|---|---|---|---|

|

1 byte |

integers |

|

|

2 bytes |

signed integers |

|

|

4 bytes |

signed integers |

|

|

4 or 8 bytes |

signed integers |

|

|

8 bytes |

signed integers |

|

|

4 bytes |

signed real numbers |

|

|

8 bytes |

signed real numbers |

|

C also provides unsigned versions of the integer numeric types (char,

short, int, long, and long long). To declare a variable as unsigned,

add the keyword unsigned before the type name. For example:

int x; // x is a signed int variable

unsigned int y; // y is an unsigned int variableThe C standard doesn’t specify whether the char type is signed or unsigned.

As a result, some implementations might implement char as signed integer values

and others as unsigned. It’s good programming practice to explicitly declare

unsigned char if you want to use the unsigned version of a char variable.

The exact number of bytes for each of the C types might vary from one

architecture to the next. The sizes in Table 2 are minimum (and

common) sizes for each type. You can print the exact size on a given machine

using C’s sizeof operator, which takes the name of a type as an argument and

evaluates to the number of bytes used to store that type. For example:

printf("number of bytes in an int: %lu\n", sizeof(int));

printf("number of bytes in a short: %lu\n", sizeof(short));The sizeof operator evaluates to an unsigned long value, so in the call to

printf, use the placeholder %lu to print its value. On most architectures

the output of these statements will be:

number of bytes in an int: 4 number of bytes in a short: 2

Arithmetic Operators

Arithmetic operators combine values of numeric types. The resulting type of

the operation is based on the types of the operands. For example, if two int

values are combined with an arithmetic operator, the resulting type is also an

integer.

C performs automatic type conversion when an operator combines operands of

two different types. For example, if an int operand is combined with

a float operand, the integer operand is first converted to its floating-point

equivalent before the operator is applied, and the type of the operation’s result

is float.

The following arithmetic operators can be used on most numeric type operands:

-

add (

+) and subtract (-) -

multiply (

*), divide (/), and mod (%):The mod operator (

%) can only take integer-type operands (int,unsigned int,short, and so on).If both operands are

inttypes, the divide operator (/) performs integer division (the resulting value is anint, truncating anything beyond the decimal point from the division operation). For example8/3evaluates to2.If one or both of the operands are

float(ordouble),/performs real division and evaluates to afloat(ordouble) result. For example,8 / 3.0evaluates to approximately2.666667. -

assignment (

=):variable = value of expression; // e.g., x = 3 + 4;

-

assignment with update (

+=,-=,*=,/=, and%=):variable op= expression; // e.g., x += 3; is shorthand for x = x + 3;

-

increment (

++) and decrement (--):variable++; // e.g., x++; assigns to x the value of x + 1

|

Pre- vs. Post-increment

The operators

In many cases, it doesn’t matter which you use because the value of the incremented or decremented variable isn’t being used in the statement. For example, these two statements are equivalent (although the first is the most commonly used syntax for this statement): In some cases, the context affects the outcome (when the value of the incremented or decremented variable is being used in the statement). For example: Code like the preceding example that uses an arithmetic expression with an

increment operator is often hard to read, and it’s easy to get wrong. As a

result, it’s generally best to avoid writing code like this; instead, write

separate statements for exactly the order you want. For example, if you want

to first increment Instead of writing this: write it as two separate statements: |

1.2. Input/Output (printf and scanf)

C’s printf function prints values to the terminal, and the scanf function

reads in values entered by a user. The printf and scanf functions belong

to C’s standard I/O library, which needs to be explicitly included at the top

of any .c file that uses these functions by using #include <stdio.h>. In

this section, we introduce the basics of using printf and scanf in C

programs. The "I/O" section in Chapter 2 discusses

C’s input and output functions in more detail.

1.2.1. printf

C’s printf function is very similar to formatted print in Python, where the

caller specifies a format string to print. The format string often contains

formatting specifiers, such as special characters that will print tabs (\t)

or newlines (\n), or placeholders for values in the output. Placeholders

consist of % followed by a type specifier letter (for example, %d

represents a placeholder for an integer value). For each placeholder in the

format string, printf expects an additional argument. Table 3

contains an example program in Python and C with formatted output:

| Python version | C version |

|---|---|

|

|

When run, both versions of this program produce identically formatted output:

Name: Vijay, Info: Age: 20 Ht: 5.9 Year: 3 Dorm: Alice Paul

The main difference between C’s printf and Python’s print functions are

that the Python version implicitly prints a newline character at the end of the

output string, but the C version does not. As a result, the C format strings in

this example have newline (\n) characters at the end to explicitly print a

newline character. The syntax for listing the argument values for the

placeholders in the format string is also slightly different in C’s printf

and Python’s print functions.

C uses the same formatting placeholders as Python for specifying different types of values. The preceding example demonstrates the following formatting placeholders:

%g: placeholder for a float (or double) value %d: placeholder for a decimal value (int, short, char) %s: placeholder for a string value

C additionally supports the %c placeholder for printing a character value.

This placeholder is useful when a programmer wants to print the ASCII character

associated with a particular numeric encoding. Here’s a C code snippet that

prints a char as its numeric value (%d) and as its character encoding

(%c):

// Example printing a char value as its decimal representation (%d)

// and as the ASCII character that its value encodes (%c)

char ch;

ch = 'A';

printf("ch value is %d which is the ASCII value of %c\n", ch, ch);

ch = 99;

printf("ch value is %d which is the ASCII value of %c\n", ch, ch);When run, the program’s output looks like this:

ch value is 65 which is the ASCII value of A ch value is 99 which is the ASCII value of c

1.2.2. scanf

C’s scanf function represents one method for reading in values entered by the

user (via the keyboard) and storing them in program variables. The scanf

function can be a bit picky about the exact format in which the user enters

data, which means that it’s not very robust to badly formed user input. In the

"I/O" section in Chapter 2, we discuss more robust

ways of reading input values from the user. For now, remember that if your

program gets into an infinite loop due to badly formed user input, you can

always press CTRL-C to terminate it.

Reading input is handled differently in Python and C: Python uses the input

function to read in a value as a string, and then the program converts the

string value to an int, whereas C uses scanf to read in an int value and to

store it at the location in memory of an int program variable (for example,

&num1). Table 4 displays example programs for reading user

input values in Python and C:

| Python version | C version |

|---|---|

|

|

When run, both programs read in two values (here, 30 and 67):

Enter a number: 30 Enter another: 67 30 + 67 = 97

Like printf, scanf takes a format string that specifies the number and types

of values to read in (for example, "%d" specifies one int value). The

scanf function skips over leading and trailing whitespace as it reads in a

numeric value, so its format string only needs to contain a sequence of

formatting placeholders, usually with no whitespace or other formatting

characters between the placeholders in its format string. The arguments for

the placeholders in the format string specify the locations of program

variables into which the values read in will be stored. Prefixing the name of

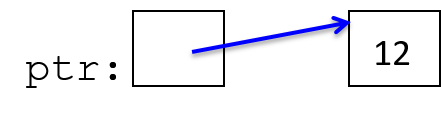

a variable with the & operator produces the location of that variable in the

program’s memory — the memory address of the variable. The

"Pointers" section in Chapter 2

discusses the & operator in more detail. For now, we use it only in the

context of the scanf function.

Here’s another scanf example, in which the format string has placeholders

for two values, the first an int and the second a float:

int x;

float pi;

// read in an int value followed by a float value ("%d%g")

// store the int value at the memory location of x (&x)

// store the float value at the memory location of pi (&pi)

scanf("%d%g", &x, &pi);When inputting data to a program via scanf, individual numeric input values

must be separated by at least one whitespace character. However, because

scanf skips over additional leading and trailing whitespace characters (for

example, spaces, tabs, and newlines), a user could enter input values with any

amount of space before or after each input value. For instance, if a user

enters the following for the call to scanf in the preceding example, scanf

will read in 8 and store it in the x variable, and then read in 3.14 and

store it in the pi variable:

8 3.14

1.3. Conditionals and Loops

Table 5 shows that the syntax and semantics of if-else

statements in C and Python are very similar. The main syntactic difference is

that Python uses indentation to indicate "body" statements, whereas C uses

curly braces (but you should still use good indentation in your C code).

| Python version | C version |

|---|---|

|

|

The Python and C syntax for if-else statements is almost identical with

only minor differences. In both, the else part is optional. Python and C

also support multiway branching by chaining if and else if statements. The

following describes the full if-else C syntax:

// a one-way branch:

if ( <boolean expression> ) {

<true body>

}

// a two-way branch:

if ( <boolean expression> ) {

<true body>

}

else {

<false body>

}

// a multibranch (chaining if-else if-...-else)

// (has one or more 'else if' following the first if):

if ( <boolean expression 1> ) {

<true body>

}

else if ( <boolean expression 2> ) {

// first expression is false, second is true

<true 2 body>

}

else if ( <boolean expression 3> ) {

// first and second expressions are false, third is true

<true 3 body>

}

// ... more else if's ...

else if ( <boolean expression N> ) {

// first N-1 expressions are false, Nth is true

<true N body>

}

else { // the final else part is optional

// if all previous expressions are false

<false body>

}1.3.1. Boolean Values in C

C doesn’t provide a Boolean type with true or false values. Instead, integer values evaluate to true or false when used in conditional statements. When used in conditional expressions, any integer expression that is:

-

zero (0) evaluates to false

-

nonzero (any positive or negative value) evaluates to true

C has a set of relational and logical operators for Boolean expressions.

The relational operators take operand(s) of the same type and evaluate to zero (false) or nonzero (true). The set of relational operators are:

-

equality (

==) and inequality (not equal,!=) -

comparison operators: less than (

<), less than or equal (<=), greater than (>), and greater than or equal (>=)

Here are some C code snippets showing examples of relational operators:

// assume x and y are ints, and have been assigned

// values before this point in the code

if (y < 0) {

printf("y is negative\n");

} else if (y != 0) {

printf("y is positive\n");

} else {

printf("y is zero\n");

}

// set x and y to the larger of the two values

if (x >= y) {

y = x;

} else {

x = y;

}C’s logical operators take integer "Boolean" operand(s) and evaluate to either zero (false) or nonzero (true). The set of logical operators are:

-

logical negation (

!) -

logical and (

&&): stops evaluating at the first false expression (short-circuiting) -

logical or (

||): stops evaluating at the first true expression (short-circuiting)

C’s short-circuit logical operator evaluation stops evaluating a logical

expression as soon as the result is known. For example, if the first operand

to a logical and (&&) expression evaluates to false, the result of the &&

expression must be false. As a result, the second operand’s value need not be

evaluated, and it is not evaluated.

The following is an example of conditional statements in C that use logical operators (it’s always best to use parentheses around complex Boolean expressions to make them easier to read):

if ( (x > 10) && (y >= x) ) {

printf("y and x are both larger than 10\n");

x = 13;

} else if ( ((-x) == 10) || (y > x) ) {

printf("y might be bigger than x\n");

x = y * x;

} else {

printf("I have no idea what the relationship between x and y is\n");

}1.3.2. Loops in C

Like Python, C supports for and while loops. Additionally, C provides

do-while loops.

while Loops

The while loop syntax in C and Python is almost identical, and the behavior

is the same. Table 6 shows example programs of while loops

in C and Python.

| Python version | C version |

|---|---|

|

|

The while loop syntax in C is very similar in Python, and both are evaluated

in the same way:

while ( <boolean expression> ) {

<true body>

}The while loop checks the Boolean expression first and executes the body if

true. In the preceding example program, the value of the val variable will be

repeatedly printed in the while loop until its value is greater than the

value of the num variable. If the user enters 10, the C and Python

programs will print:

1 2 4 8

C also has a do-while loop that is similar to its while loop, but

it executes the loop body first and then checks a condition and repeats

executing the loop body for as long as the condition is true. That is,

a do-while loop will always execute the loop body at least one time:

do {

<body>

} while ( <boolean expression> );For additional while loop examples, try these two programs:

for Loops

The for loop is different in C than it is in Python. In Python, for loops

are iterations over sequences, whereas in C, for loops are more general

looping constructs. Table 7 shows example programs that use for

loops to print all the values between 0 and a user-provided input number:

| Python version | C version |

|---|---|

|

|

In this example, you can see that the C for loop syntax is quite different

from the Python for loop syntax. It’s also evaluated differently.

The C for loop syntax is:

for ( <initialization>; <boolean expression>; <step> ) {

<body>

}The for loop evaluation rules are:

-

Evaluate initialization one time when first entering the loop.

-

Evaluate the boolean expression. If it’s 0 (false), drop out of the

forloop (that is, the program is done repeating the loop body statements). -

Evaluate the statements inside the loop body.

-

Evaluate the step expression.

-

Repeat from step (2).

Here’s a simple example for loop to print the values 0, 1, and 2:

int i;

for (i = 0; i < 3; i++) {

printf("%d\n", i);

}Executing the for loop evaluation rules on the preceding loop yields the

following sequence of actions:

(1) eval init: i is set to 0 (i=0) (2) eval bool expr: i < 3 is true (3) execute loop body: print the value of i (0) (4) eval step: i is set to 1 (i++) (2) eval bool expr: i < 3 is true (3) execute loop body: print the value of i (1) (4) eval step: i is set to 2 (i++) (2) eval bool expr: i < 3 is true (3) execute loop body: print the value of i (2) (4) eval step: i is set to 3 (i++) (2) eval bool expr: i < 3 is false, drop out of the for loop

The following program shows a more complicated for loop example (it’s also

available to download). Note that just

because C supports for loops with a list of statements for its

initialization and step parts, it’s best to keep it simple (this example

illustrates a more complicated for loop syntax, but the for loop would be

easier to read and understand if it were simplified by moving the j += 10 step

statement to the end of the loop body and having just a single step statement,

i += 1).

/* An example of a more complex for loop which uses multiple variables.

* (it is unusual to have for loops with multiple statements in the

* init and step parts, but C supports it and there are times when it

* is useful...don't go nuts with this just because you can)

*/

#include <stdio.h>

int main(void) {

int i, j;

for (i=0, j=0; i < 10; i+=1, j+=10) {

printf("i+j = %d\n", i+j);

}

return 0;

}

// the rules for evaluating a for loop are the same no matter how

// simple or complex each part is:

// (1) evaluate the initialization statements once on the first

// evaluation of the for loop: i=0 and j=0

// (2) evaluate the boolean condition: i < 10

// if false (when i is 10), drop out of the for loop

// (3) execute the statements inside the for loop body: printf

// (4) evaluate the step statements: i += 1, j += 10

// (5) repeat, starting at step (2)In C, for loops and while loops are equivalent in power, meaning that any

while loop can be expressed as a for loop, and vice versa. The same is not true

in Python, where for loops are iterations over a sequence of values. As

such, they cannot express some looping behavior that the more general Python

while loop can express. Indefinite loops are one example that can only be

written as a while loop in Python.

Consider the following while loop in C:

int guess = 0;

while (guess != num) {

printf("%d is not the right number\n", guess);

printf("Enter another guess: ");

scanf("%d", &guess);

}This loop can be translated to an equivalent for loop in C:

int guess;

for (guess = 0; guess != num; ) {

printf("%d is not the right number\n", guess);

printf("Enter another guess: ");

scanf("%d", &guess);

}In Python, however, this type of looping behavior can be expressed only by

using a while loop.

Because for and while loops are equally expressive in C, only one looping

construct is needed in the language. However, for loops are a more natural

language construct for definite loops (like iterating over a range of values),

whereas while loops are a more natural language construct for indefinite loops

(like repeating until the user enters an even number). As a result, C provides

both to programmers.

1.4. Functions

Functions break code into manageable pieces and reduce code duplication. Functions might take zero or more parameters as input and they return a single value of a specific type. A function declaration or prototype specifies the function’s name, its return type, and its parameter list (the number and types of all the parameters). A function definition includes the code to be executed when the function is called. All functions in C must be declared before they’re called. This can be done by declaring a function prototype or by fully defining the function before calling it:

// function definition format:

// ---------------------------

<return type> <function name> (<parameter list>)

{

<function body>

}

// parameter list format:

// ---------------------

<type> <param1 name>, <type> <param2 name>, ..., <type> <last param name>

Here’s an example function definition. Note that the comments describe what the function does, the details of each parameter (what it’s used for and what it should be passed), and what the function returns:

/* This program computes the larger of two

* values entered by the user.

*/

#include <stdio.h>

/* max: computes the larger of two integer values

* x: one integer value

* y: the other integer value

* returns: the larger of x and y

*/

int max(int x, int y) {

int bigger;

bigger = x;

if (y > x) {

bigger = y;

}

printf(" in max, before return x: %d y: %d\n", x, y);

return bigger;

}Functions that don’t return a value should specify the void return type.

Here’s an example of a void function:

/* prints out the squares from start to stop

* start: the beginning of the range

* stop: the end of the range

*/

void print_table(int start, int stop) {

int i;

for (i = start; i <= stop; i++) {

printf("%d\t", i*i);

}

printf("\n");

}As in any programming language that supports functions or procedures, a function call invokes a function, passing specific argument values for the particular call. A function is called by its name and is passed arguments, with one argument for each corresponding function parameter. In C, calling a function looks like this:

// function call format:

// ---------------------

function_name(<argument list>);

// argument list format:

// ---------------------

<argument 1 expression>, <argument 2 expression>, ..., <last argument expression>Arguments to C functions are passed by value: each function parameter is assigned the value of the corresponding argument passed to it in the function call by the caller. Pass by value semantics mean that any change to a parameter’s value in the function (that is, assigning a parameter a new value in the function) is not visible to the caller.

Here are some example function calls to the max and print_table functions

listed earlier:

int val1, val2, result;

val1 = 6;

val2 = 10;

/* to call max, pass in two int values, and because max returns an

int value, assign its return value to a local variable (result)

*/

result = max(val1, val2); /* call max with argument values 6 and 10 */

printf("%d\n", result); /* prints out 10 */

result = max(11, 3); /* call max with argument values 11 and 3 */

printf("%d\n", result); /* prints out 11 */

result = max(val1 * 2, val2); /* call max with argument values 12 and 10 */

printf("%d\n", result); /* prints out 12 */

/* print_table does not return a value, but takes two arguments */

print_table(1, 20); /* prints a table of values from 1 to 20 */

print_table(val1, val2); /* prints a table of values from 6 to 10 */Here is another example of a full program that shows a call to a

slightly different implementation of the max function that has an

additional statement to change the value of its parameter (x = y):

/* max: computes the larger of two int values

* x: one value

* y: the other value

* returns: the larger of x and y

*/

int max(int x, int y) {

int bigger;

bigger = x;

if (y > x) {

bigger = y;

// note: changing the parameter x's value here will not

// change the value of its corresponding argument

x = y;

}

printf(" in max, before return x: %d y: %d\n", x, y);

return bigger;

}

/* main: shows a call to max */

int main(void) {

int a, b, res;

printf("Enter two integer values: ");

scanf("%d%d", &a, &b);

res = max(a, b);

printf("The larger value of %d and %d is %d\n", a, b, res);

return 0;

}The following output shows what two runs of this program might look like. Note

the difference in the parameter x’s value (printed from inside the `max

function) in the two runs. Specifically, notice that changing the value of

parameter x in the second run does not affect the variable that was passed

in as an argument to max after the call returns:

$ ./a.out Enter two integer values: 11 7 in max, before return x: 11 y: 7 The larger value of 11 and 7 is 11 $ ./a.out Enter two integer values: 13 100 in max, before return x: 100 y: 100 The larger value of 13 and 100 is 100

Because arguments are passed by value to functions, the preceding version of

the max function that changes one of its parameter values behaves identically

to the original version of max that does not.

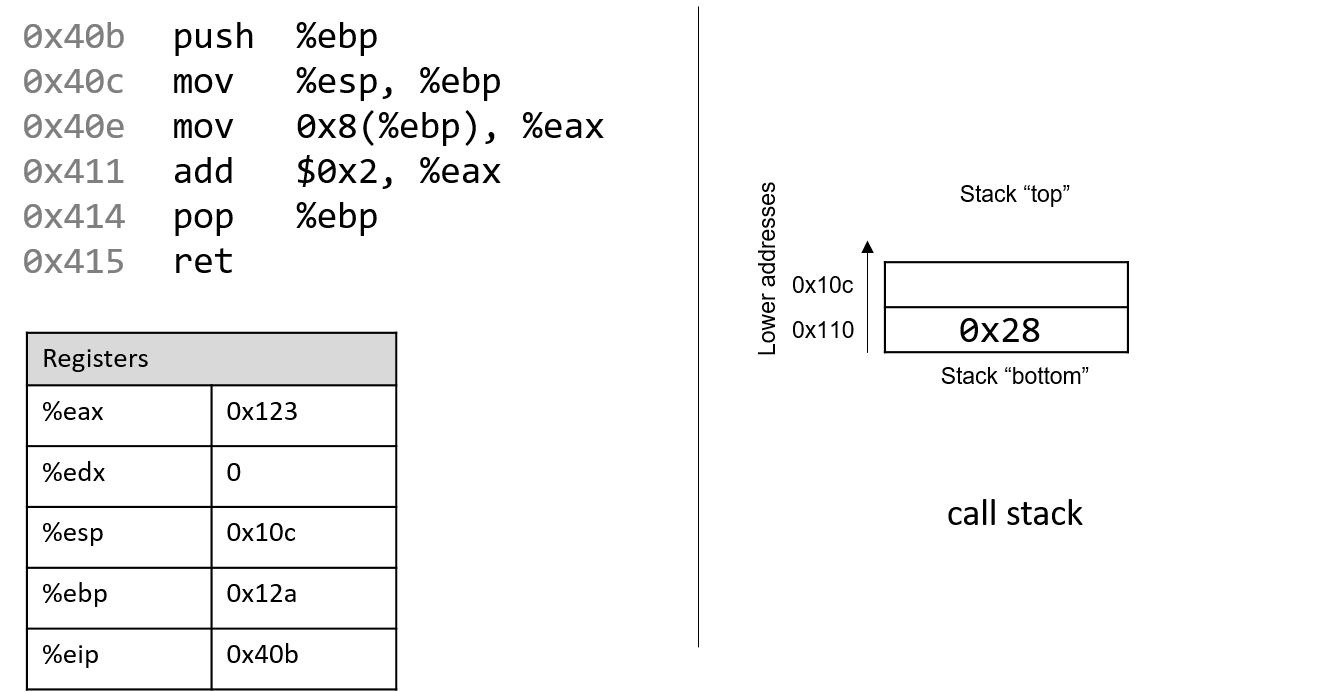

1.4.1. The Stack

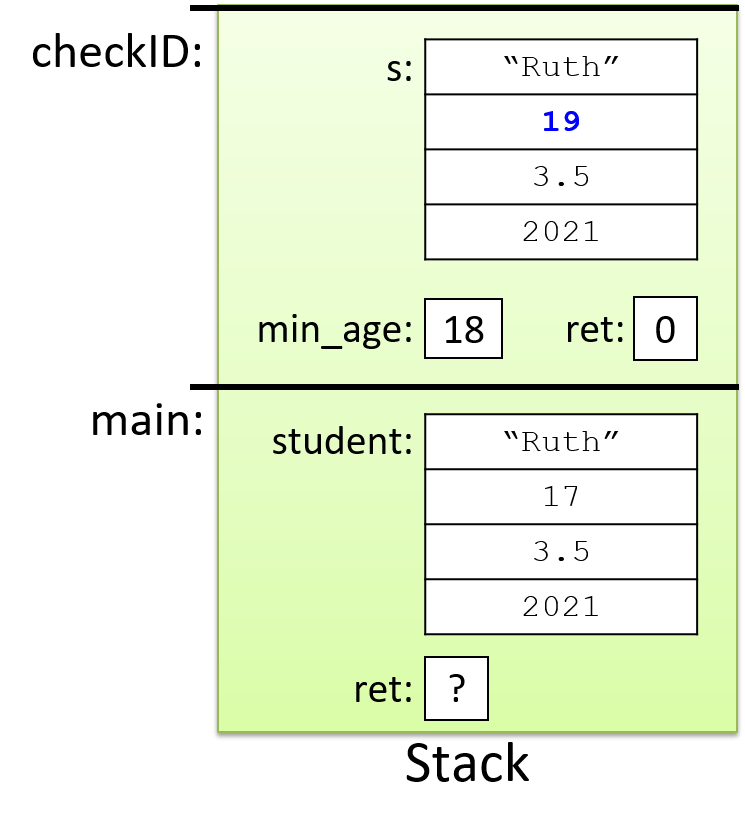

The execution stack keeps track of the state of active functions in a program. Each function call creates a new stack frame (sometimes called an activation frame or activation record) containing its parameter and local variable values. The frame on the top of the stack is the active frame; it represents the function activation that is currently executing, and only its local variables and parameters are in scope. When a function is called, a new stack frame is created for it (pushed on the top of the stack), and space for its local variables and parameters is allocated in the new frame. When a function returns, its stack frame is removed from the stack (popped from the top of the stack), leaving the caller’s stack frame on the top of the stack.

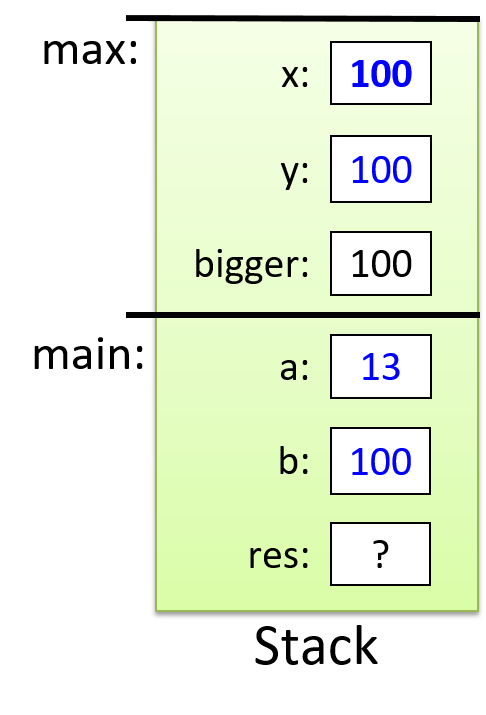

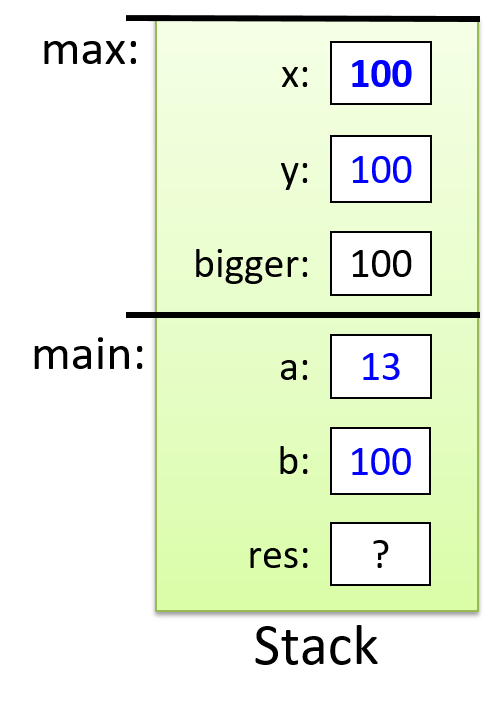

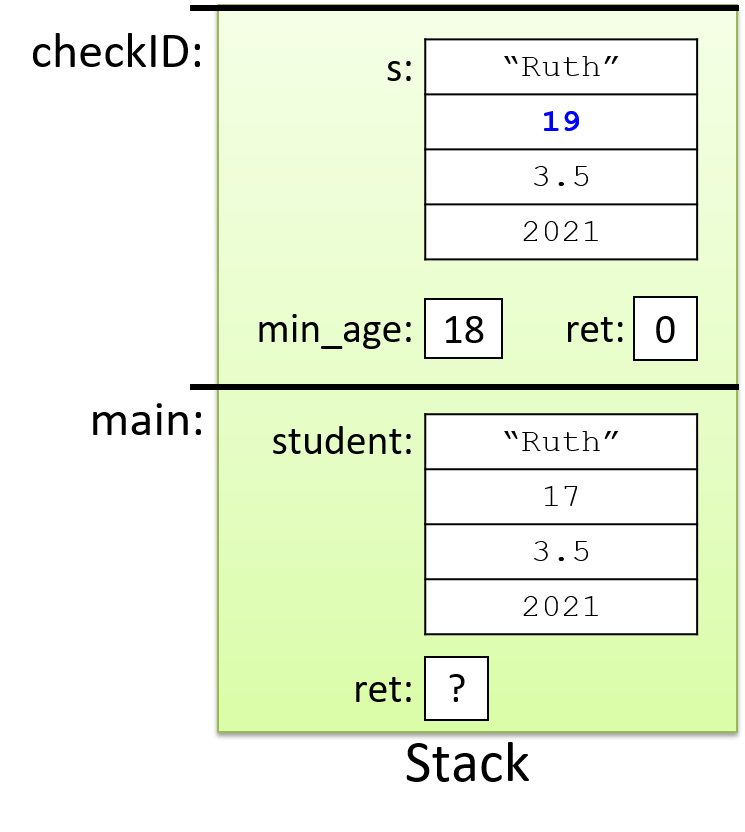

For the example preceding program, at the point in its execution right before max

executes the return statement, the execution stack will look like

Figure 6. Recall that the argument values to max passed by

main are passed by value, meaning that the parameters to max, x and

y, are assigned the values of their corresponding arguments, a and b from

the call in main. Despite the max function changing the value of x, the

change doesn’t affect the value of a in main.

The following full program includes two functions and shows examples of calling

them from the main function. In this program, we declare function prototypes

for max and print_table above the main function so that main can access them

despite being defined first. The main function contains the high-level steps

of the full program, and defining it first echoes the top-down design of the

program. This example includes comments describing the parts of the program

that are important to functions and function calls. You can also download and

run the full program.

/* This file shows examples of defining and calling C functions.

* It also demonstrates using scanf().

*/

#include <stdio.h>

/* This is an example of a FUNCTION PROTOTYPE. It declares just the type

* information for a function (the function's name, return type, and parameter

* list). A prototype is used when code in main wants to call the function

* before its full definition appears in the file.

*/

int max(int n1, int n2);

/* A prototype for another function. void is the return type of a function

* that does not return a value

*/

void print_table(int start, int stop);